Artificial light sources make our daily life convenient, but cause a severe problem called light pollution. We propose a novel system for efficient visualization of light pollution in the night sky. Numerous methods have been proposed for rendering the sky, but most of these focus on rendering of the daytime or the sunset sky where the sun is the only, or the dominant, light source. For the visualization of the light pollution, however, we must consider many city light sources on the ground, resulting in excessive computational cost. We address this problem by precomputing a set of intensity distributions for the sky illuminated by city light at various locations and with different atmospheric conditions. We apply a principal component analysis and fast Fourier transform to the precomputed distributions, allowing us to efficiently visualize the extent of the light pollution. Using this method, we can achieve one to two orders of magnitude faster computation compared to a naive approach that simply accumulates the scattered intensity for each viewing ray. Furthermore, the fast computation allows us to interactively solve the inverse problem that determines the city light intensity needed to reduce light pollution. Our system provides the user with both a forward and inverse investigation tool for the study and minimization of light pollution.

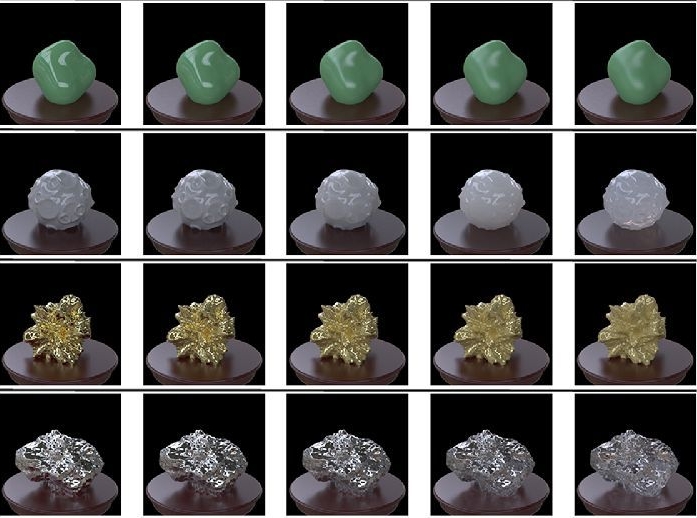

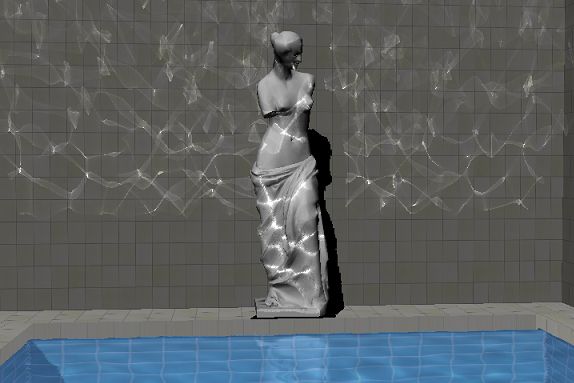

Complex visual processing involved in perceiving the object materials can be better elucidated by taking a variety of research approaches. Sharing stimulus and response data is an effective strategy to make the results of different studies directly comparable and can assist researchers with different backgrounds to jump into the field. Here, we constructed a database containing several sets of material images annotated with visual discrimination performance. We created the material images using physically based computer graphics techniques and conducted psychophysical experiments with them in both laboratory and crowdsourcing settings. The observer's task was to discriminate materials on one of six dimensions (gloss contrast, gloss distinctness of image, translucent vs. opaque, metal vs. plastic, metal vs. glass, and glossy vs. painted). The illumination consistency and object geometry were also varied. We used a nonverbal procedure (an oddity task) applicable for diverse use cases, such as cross-cultural, cross-species, clinical, or developmental studies. Results showed that the material discrimination depended on the illuminations and geometries and that the ability to discriminate the spatial consistency of specular highlights in glossiness perception showed larger individual differences than in other tasks. In addition, analysis of visual features showed that the parameters of higher order color texture statistics can partially, but not completely, explain task performance. The results obtained through crowdsourcing were highly correlated with those obtained in the laboratory, suggesting that our database can be used even when the experimental conditions are not strictly controlled in the laboratory. Several projects using our dataset are underway.

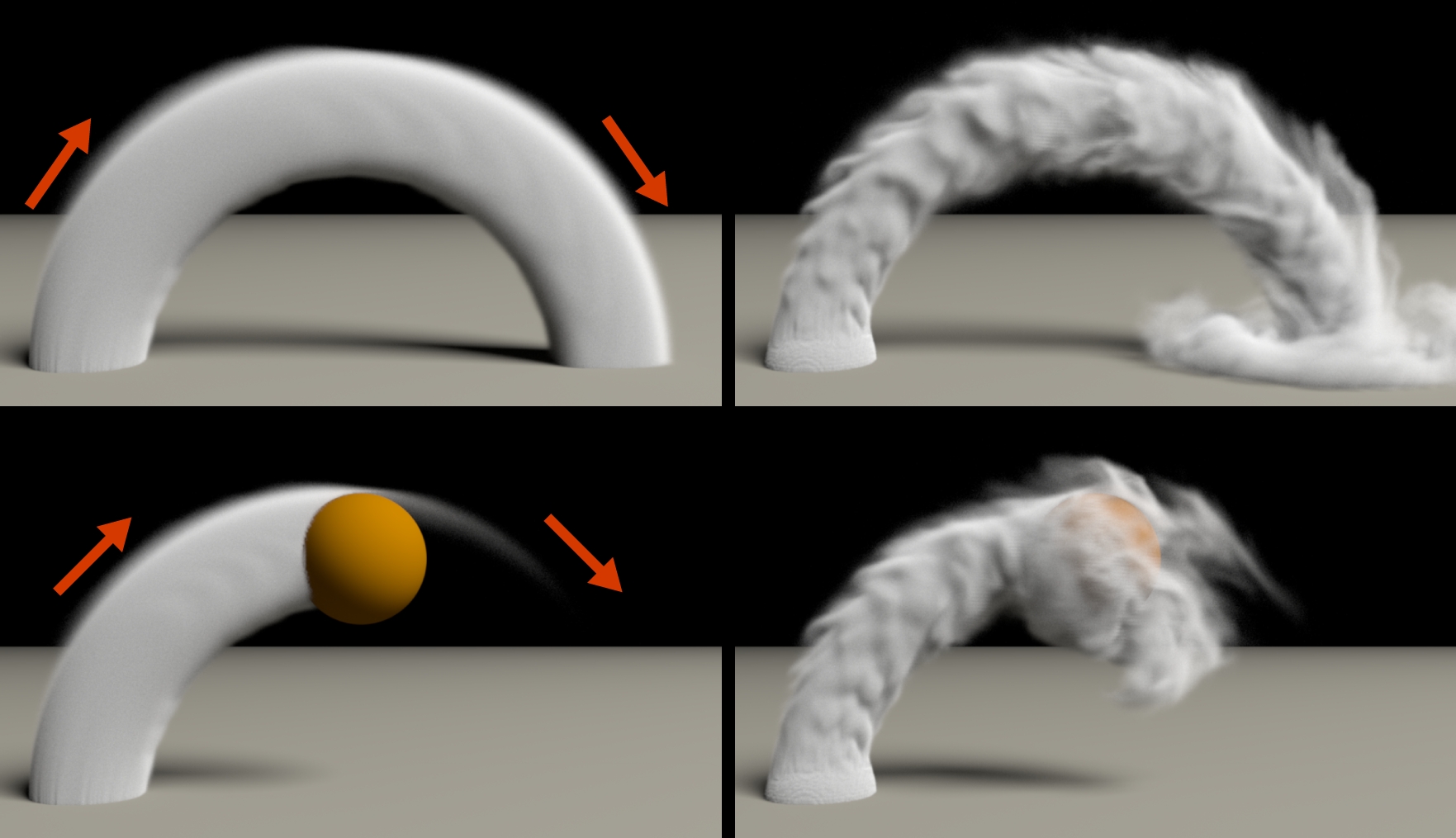

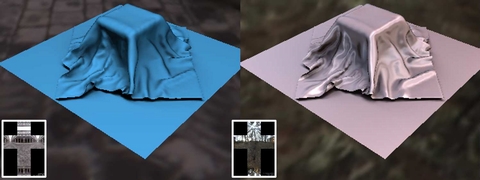

High-resolution fluid simulations are computationally expensive, so many post-processing methods have been proposed to add turbulent details to low-resolution flows. Guiding methods are one promising approach for adding naturalistic, detailed motions as a post-process, but can be inefficient. Thus, we propose a novel, efficient method that formulates fluid guidance as a minimization problem in stream function space. Input flows are first converted into stream functions, and a high resolution flow is then computed via optimization. The resulting problem sizes are much smaller than previous approaches, resulting in faster computation times. Additionally, our method does not require an expensive pressure projection, but still preserves mass. The method is both easy to implement and easy to control, as the user can control the degree of guiding with a single, intuitive parameter. We demonstrate the effectiveness of our method across various examples.

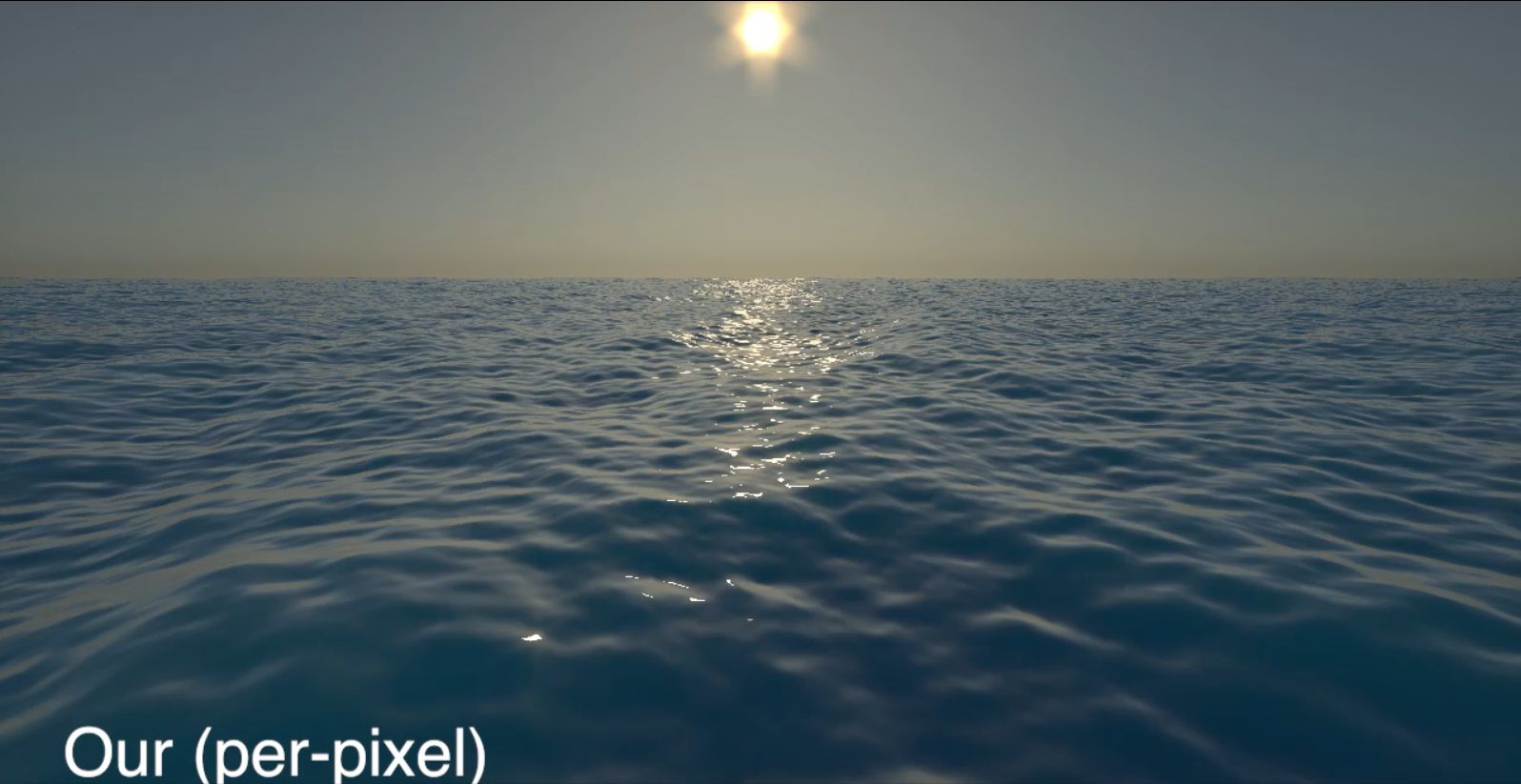

The reflection of a bright light source on a dynamic surface such as water with waves can be difficult to render well in real time due to reflection aliasing and flickering. In this paper, we propose a solution to this problem by approximating the reflection direction distribution for the water surface as an elliptical Gaussian distribution. Then we analytically integrate the reflection contribution throughout the rendering interval time. Our method can render in real time an animation of the time integrated reflection of a spherical light source on highly dynamic waves with reduced aliasing and flickering.

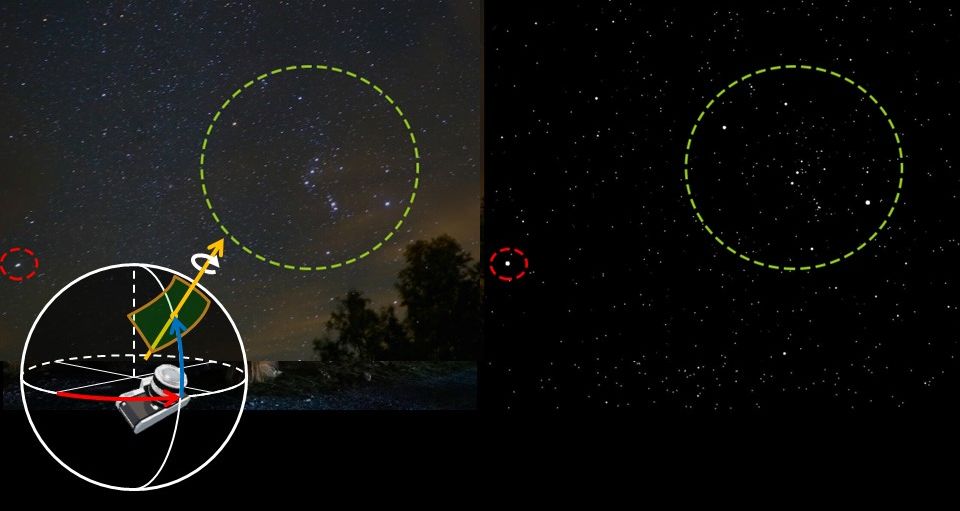

We propose an efficient, specific method for estimating camera parameters from a single starry night image. Such an image consists of a collection of disks representing stars, so traditional estimation methods for common pictures do not work. Our method uses a database, a star catalog, that stores the positions of stars on the celestial sphere. Our method computes magnitudes (i.e., brightnesses) of stars in the input image and uses them to find the corresponding stars in the star catalog. Camera parameters can then be estimated by a simple geometric calculation. Our method is over ten times faster and more accurate than a previous method.
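The magnitude step mentioned above can be illustrated with the standard astronomical relation between flux ratio and magnitude difference, m1 - m2 = -2.5 log10(F1 / F2). The following is a minimal sketch, not the authors' implementation: the function name `relative_magnitudes` and the choice of the brightest detected star as the zero point are assumptions made only for illustration.

```python
import math

def relative_magnitudes(fluxes):
    """Magnitudes relative to the brightest star (assumed zero point).

    fluxes: positive summed pixel intensities, one per detected star.
    Uses Pogson's relation m = -2.5 * log10(F / F_ref).
    """
    ref = max(fluxes)
    return [-2.5 * math.log10(f / ref) for f in fluxes]

# Hypothetical measurements: the second star is 100 times fainter.
mags = relative_magnitudes([100.0, 1.0])
```

A factor of 100 in flux corresponds to five magnitudes, so the fainter star receives a relative magnitude of about 5.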

Recent advances in bidirectional path tracing (BPT) reveal that the use of multiple light sub-paths and the resampling of a small number of these can improve the efficiency of BPT. By increasing the number of pre-sampled light sub-paths, the possibility of generating light paths that provide large contributions can be better explored and this can alleviate the correlation of light paths due to the reuse of pre-sampled light sub-paths by all eye sub-paths. The increased number of pre-sampled light sub-paths, however, also incurs a high computational cost. In this paper, we propose a two-stage resampling method for BPT to efficiently handle a large number of pre-sampled light sub-paths. We also derive a weighting function that can treat the changes in path probability due to the two-stage resampling. Our method can handle two orders of magnitude more pre-sampled light sub-paths than previous methods in equal-time rendering, resulting in stable and better noise reduction than state-of-the-art methods.

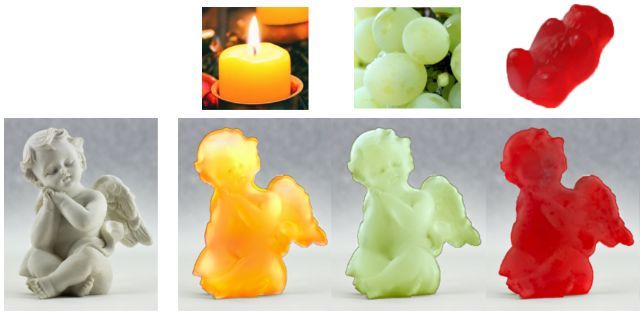

This paper introduces an image-based material transfer framework which, like an ordinary color transfer method, requires only a single input image and a single reference image. In contrast to previous material transfer methods, we focus on transferring the appearances of translucent objects. There are two challenging problems in such material transfer for translucent objects. First, the appearances of translucent materials are characterized by not only colors but also their spatial distribution. Unfortunately, the traditional color transfer methods can hardly handle the translucency because they only consider the colors of the objects. Second, temporal coherency in the transferred results cannot be handled by the traditional methods, nor by recent neural style transfer methods, as we demonstrate in this paper. To address these problems, we propose a novel image-based material transfer method based on the analysis of spatial color distribution. We focus on "subbands," which represent multi-scale image structures, and find that the correlation between color distribution and subbands is a key feature for reproducing the appearances of translucent materials. Our method relies on a standard principal component analysis (PCA), which harmonizes the correlation of input and reference images to reproduce the translucent appearance. Considering the spatial color distribution in the input and reference images, our method can be naturally applied to video sequences in a frame-by-frame manner without any additional pre-/post-process. Through experimental analyses, we demonstrate that the proposed method can be applied to a broad variety of translucent materials, and their resulting appearances are perceptually similar to those of the reference images.

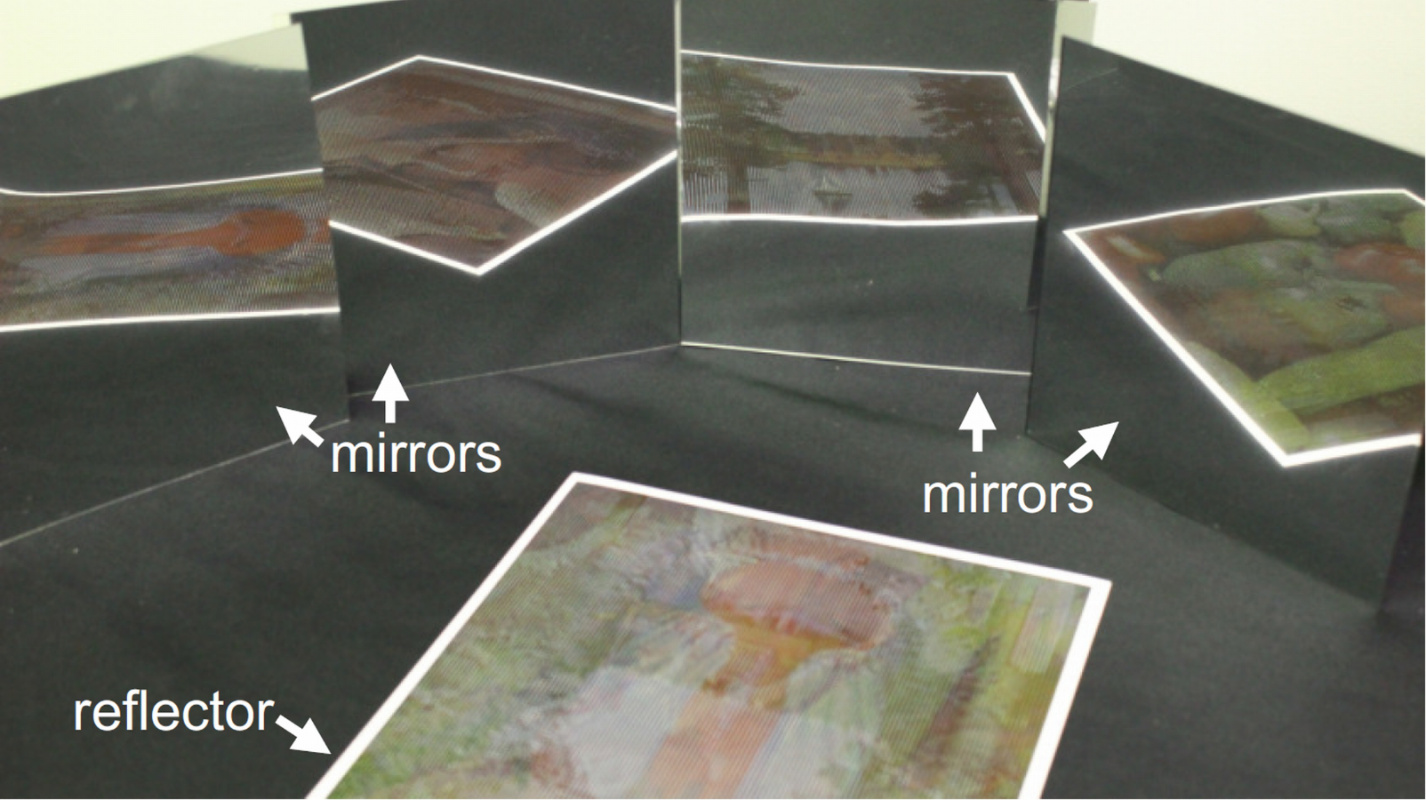

A great deal of attention has been devoted, not only in industry but also in the arts, to the fabrication of reflectors that can display different color images when viewed from different directions. Although such reflectors have previously been successfully fabricated, the number of images displayed has been limited to two, or the results suffer from ghosting artifacts where mixed images appear. Furthermore, the previous methods need special hardware and/or materials to fabricate the reflectors. Thus, those techniques are not suitable for printing reflectors on everyday personal objects made of different materials, such as name cards, letter sheets, envelopes, and plastic cases. To overcome these limitations, we propose a method for fabricating reflectors using a standard ultraviolet printer (UV printer). A UV printer can render a specified 2D color pattern on an arbitrary material, and by overprinting, the printed pattern can be raised; that is, the printed pattern becomes a microstructure having color and height. We propose using these microstructures to formulate a method for designing spatially varying reflections that can display different target images when viewed from different directions. The microstructure is calculated by minimizing an objective function that measures the differences between the intensities of the light reflected from the reflector and those of the target image. We show several fabricated reflectors to demonstrate the usefulness of the proposed method.

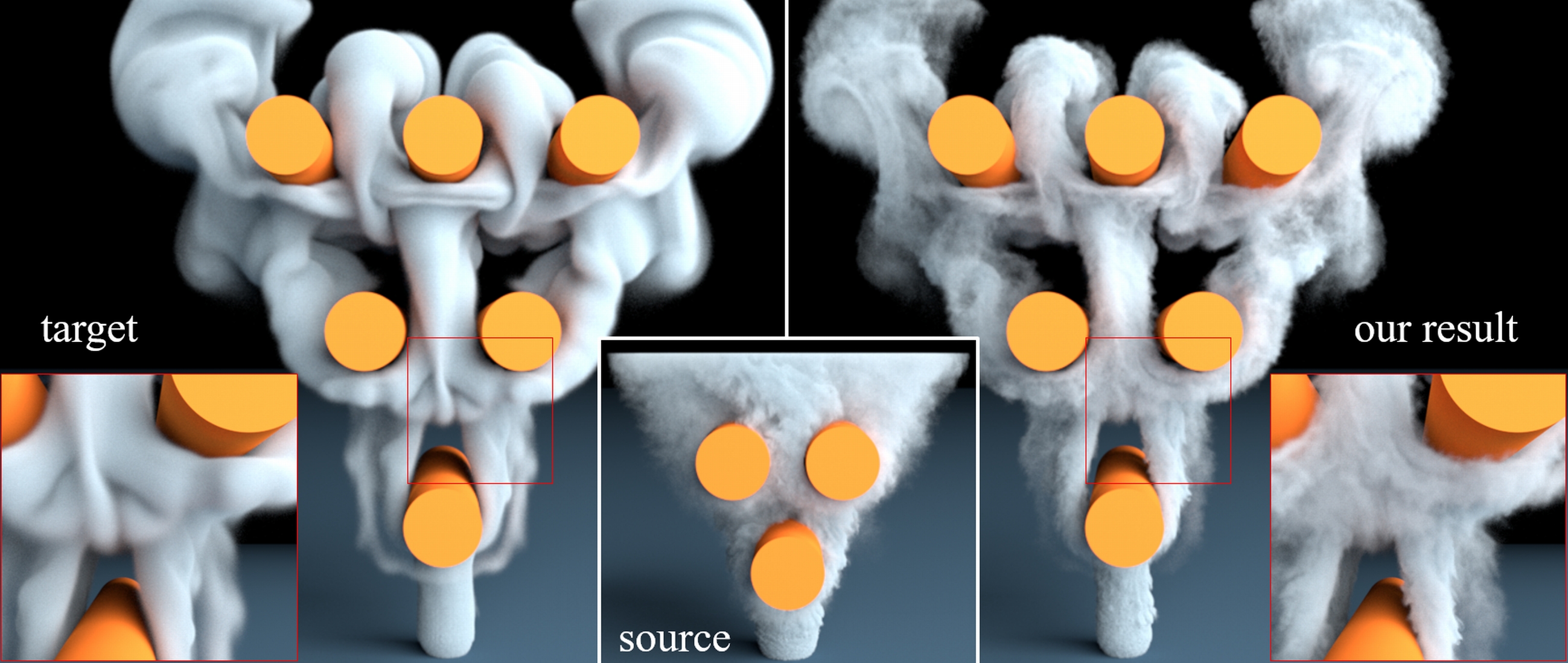

Generating realistic fluid simulations remains computationally expensive, and animators can expend enormous effort trying to achieve a desired motion. To reduce such costs, several methods have been developed in which high-resolution turbulence is synthesized as a post-process. Since global motion can then be obtained using a fast, low-resolution simulation, less effort is needed to create a realistic animation with the desired behavior. While much research has focused on accelerating the low-resolution simulation, the problem of controlling the behavior of the turbulent, high-resolution motion has received little attention. In this paper, we show that style transfer methods from image editing can be adapted to transfer the turbulent style of an existing fluid simulation onto a new one. We do this by extending example-based image synthesis methods to handle velocity fields using a combination of patch-based and optimization-based texture synthesis. This approach allows us to take into account the incompressibility condition, which we have found to be an important factor during synthesis. Using our method, a user can easily and intuitively create high-resolution fluid animations that have a desired turbulent motion.

The computational cost of creating realistic fluid animations by numerical simulation is generally high. In digital production environments, existing precomputed fluid animations are often reused for different scenes in order to reduce the cost of creating scenes containing fluids. However, applying the same animation to different scenes often produces unacceptable results, so the animation needs to be edited. In order to help animators with the editing process, we develop a novel method for synthesizing the desired fluid animations by combining existing flow data. Our system allows the user to place flows at desired positions and combine them. We do this by interpolating velocities at the boundaries between the flows. The interpolation is formulated as a minimization problem of an energy function, which is designed to take into account the inviscid, incompressible Navier-Stokes equations. Our method focuses on smoke simulations defined on a uniform grid. We demonstrate the potential of our method by showing a set of examples, including a large-scale sandstorm created from a few flow data sets simulated in a small-scale space.

In this paper, we present a three-dimensional (3D) digitization technique for natural objects, such as insects and plants. The key idea is to combine X-ray computed tomography (CT) and photographs to obtain both complicated 3D shapes and surface textures of target specimens. We measure a specimen by using an X-ray CT device and a digital camera to obtain a CT volumetric image (volume) and multiple photographs. We then reconstruct a 3D model by segmenting the CT volume and generate a texture by projecting the photographs onto the model. To achieve this reconstruction, we introduce a technique for estimating a camera position for each photograph. We also present techniques for merging multiple textures generated from multiple photographs and recovering missing texture areas caused by occlusion. We illustrate the feasibility of our 3D digitization technique by digitizing 3D textured models of insects and flowers. The combination of X-ray CT and a digital camera makes it possible to successfully digitize specimens with complicated 3D structures accurately and allows us to browse both surface colors and internal structures.

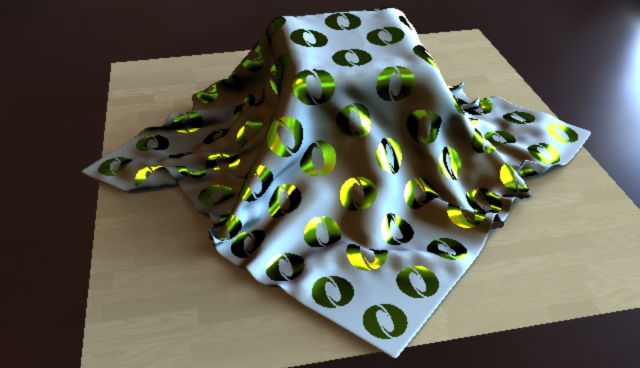

This paper proposes an inverse approach for modeling the appearance of interwoven cloth. Creating the desired appearance in cloth is difficult because many factors, such as the type of thread and the weaving pattern, have to be considered. Design tools that enable the desired visual appearance of the cloth to be replicated are therefore beneficial for many computer graphics applications. In this paper, we focus on the design of the appearance of interwoven cloth whose reflectance properties are significantly affected by the weaving patterns. Although there are several systems that support editing of weaving patterns, they lack an inverse design tool that automatically determines the spatially-varying bi-directional reflectance distribution function (BRDF) from the weaving patterns required to make the cloth display the desired appearance. We propose a method for computing the cloth BRDFs that can be used to display the desired image provided by the user. We formulate this problem as a cost minimization and solve it by computing the shortest path of a graph. We demonstrate the effectiveness of the method with several examples.

We present a system that automatically suggests the furniture layout when one moves into a new house, taking into account the furniture layout in the previous house. The input to our system comprises the floor plans of the previous and new houses, and the furniture layout in the previous house. The furniture layout for the whole house is suggested. This method builds on a previous furniture layout method with which the furniture layout for a single room only is computed. In this paper, we propose a new method that can suggest the furniture layout for multiple rooms in the new house. To deal with this problem, we took a heuristic approach in developing a cost function by adding some new cost functions to the previous method. We show various layouts computed using our method, which demonstrate its effectiveness.

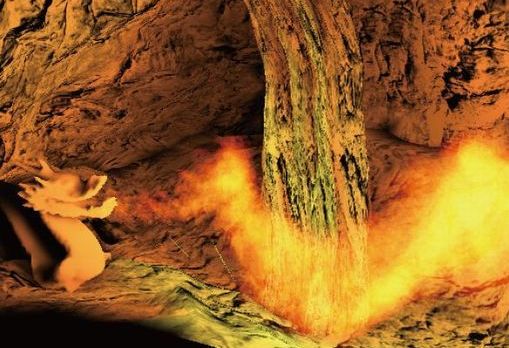

Visual simulation of fire plays an important role in many applications, such as movies and computer games. In these applications, artists are often requested to synthesize realistic fire with a particular behavior. To meet this requirement, we present a feedback control method for fire simulations. The user can design the shape of fire by placing a set of control points. Our method generates a force field and automatically adjusts the temperature at a fire source, based on user-specified control points. Experimental results show that our method can control the fire shape.

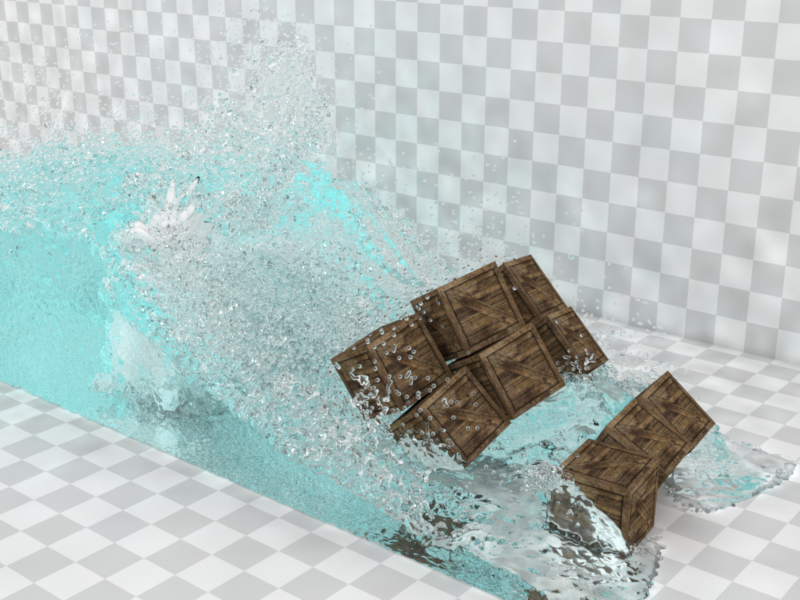

We propose a hybrid Smoothed Particle Hydrodynamics solver for efficiently simulating incompressible fluids using an interface handling method for boundary conditions in the pressure Poisson equation. We blend particle density computed with one smooth and one spiky kernel to improve the robustness against both fluid-fluid and fluid-solid collisions. To further improve the robustness and efficiency, we present a new interface handling method consisting of two components: free surface handling for Dirichlet boundary conditions and solid boundary handling for Neumann boundary conditions. Our free surface handling appropriately determines particles for Dirichlet boundary conditions using Jacobi-based pressure prediction while our solid boundary handling introduces a new term to ensure the solvability of the linear system. We demonstrate that our method outperforms the state-of-the-art particle-based fluid solvers.

We present a system to quickly and easily create an animation of water scenes in a single image. Our method relies on a database of videos of water scenes and a video retrieval technique. Given an input image, alpha masks specifying regions of interest, and sketches specifying flow directions, our system first retrieves appropriate video candidates from the database and creates the candidate animations for each region of interest as the composite of the input image and the retrieved videos; this process takes less than one minute by taking advantage of parallel distributed processing. Our system then allows the user to interactively control the speed of the desired animation and select the appropriate animation. After selecting the animation for all the regions, the resulting animation is completed. Finally, the user optionally applies a texture synthesis algorithm to recover the appearance of the input image. We demonstrate that our system allows the user to create a variety of animations of water scenes.

The popularity of many-light rendering, which converts complex global illumination computations into a simple sum of the illumination from virtual point lights (VPLs), for predictive rendering has increased in recent years. A huge number of VPLs are usually required for predictive rendering at the cost of extensive computational time. While previous methods can achieve significant speedup by clustering VPLs, none of these previous methods can estimate the total errors due to clustering. This drawback imposes on users tedious trial and error processes to obtain rendered images with reliable accuracy. In this paper, we propose an error estimation framework for many-light rendering. Our method transforms VPL clustering into stratified sampling combined with confidence intervals, which enables the user to estimate the error due to clustering without the costly computing required to sum the illumination from all the VPLs. Our estimation framework is capable of handling arbitrary BRDFs and is accelerated by using visibility caching, both of which make our method more practical. The experimental results demonstrate that our method can estimate the error much more accurately than the previous clustering method.
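The idea of treating clusters as strata can be sketched as follows. This is a minimal illustration of textbook stratified sampling with a normal-approximation confidence interval, not the paper's estimator: the function name `stratified_total`, sampling with replacement, and the fixed z-value of 1.96 are all assumptions.

```python
import math
import random

def stratified_total(strata, samples_per_stratum, rng, z=1.96):
    """Estimate the sum over all strata and a confidence half-width.

    strata: list of lists; each inner list holds the (hypothetical)
            contributions of the lights in one cluster.
    Each stratum is sampled uniformly with replacement.
    """
    if samples_per_stratum < 2:
        raise ValueError("need at least two samples to estimate variance")
    total = 0.0
    variance = 0.0
    for values in strata:
        n_h = len(values)
        picks = [rng.choice(values) for _ in range(samples_per_stratum)]
        mean = sum(picks) / samples_per_stratum
        # Unbiased sample variance within this stratum.
        s2 = sum((p - mean) ** 2 for p in picks) / (samples_per_stratum - 1)
        total += n_h * mean
        variance += n_h ** 2 * s2 / samples_per_stratum
    return total, z * math.sqrt(variance)

rng = random.Random(0)
clusters = [[2.0, 2.1, 1.9, 2.0], [0.5, 0.4, 0.6]]  # hypothetical data
estimate, half_width = stratified_total(clusters, 4, rng)
```

The half-width shrinks when the contributions within each cluster are similar, which is why a good clustering gives a tight error estimate without summing every light.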

Fast realistic rendering of objects in scattering media is still a challenging topic in computer graphics. In the presence of participating media, a light beam is repeatedly scattered by media particles, changing direction and getting spread out. Explicitly evaluating this beam distribution would enable efficient simulation of multiple scattering events without involving costly stochastic methods. Narrow beam theory provides explicit equations that approximate light propagation in a narrow incident beam. Based on this theory, we propose a closed-form distribution function for scattered beams. We successfully apply it to the image synthesis of scenes in which scattering occurs, and show that our proposed estimation method is more accurate than those based on the Wentzel-Kramers-Brillouin (WKB) theory.

Furniture layout design is a challenging problem, and several methods have recently been proposed. Although the lighting environment in a room has a strong relationship with the furniture functionality, the previous methods completely overlooked it in designing furniture layout. This paper addresses this problem; we propose an efficient method for computing furniture layout taking into account the lighting environment. We propose a new cost function that evaluates the lighting environment taking into account inter-reflections of light. A fast method for evaluating the cost function is also proposed. We demonstrate that our method improves the quality and usability of furniture layout by taking into account the lighting environment.

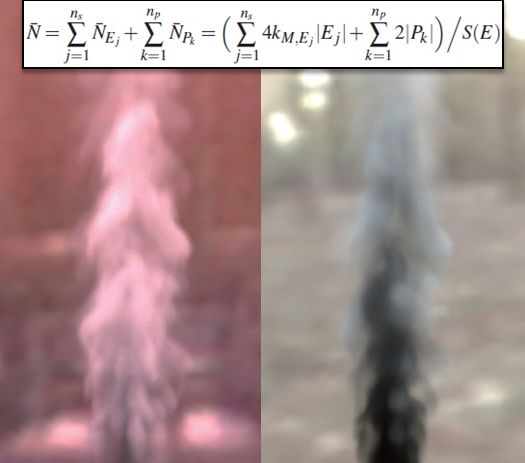

We propose a method of three-dimensional (3D) modeling of volumetric fluid phenomena from sparse multi-view images (e.g., only a single-view input or a pair of front- and side-view inputs). The volume determined from such sparse inputs using previous methods appears blurry and unnatural with novel views; however, our method preserves the appearance of novel viewing angles by transferring the appearance information from input images to novel viewing angles. For appearance information, we use histograms of image intensities and steerable coefficients. We formulate the volume modeling as an energy minimization problem with statistical hard constraints, which is solved using an expectation maximization (EM)-like iterative algorithm. Our algorithm begins with a rough estimate of the initial volume modeled from the input images, followed by an iterative process whereby we first render the images of the current volume with novel viewing angles. Then, we modify the rendered images by transferring the appearance information from the input images, and we thereafter model the improved volume based on the modified images. We iterate these operations until the volume converges. We demonstrate our method successfully provides natural-looking volume sequences of fluids (i.e., fire, smoke, explosions, and a water splash) from sparse multi-view videos. To create production-ready fluid animations, we further propose a method of rendering and editing fluids using a commercially available fluid simulator.

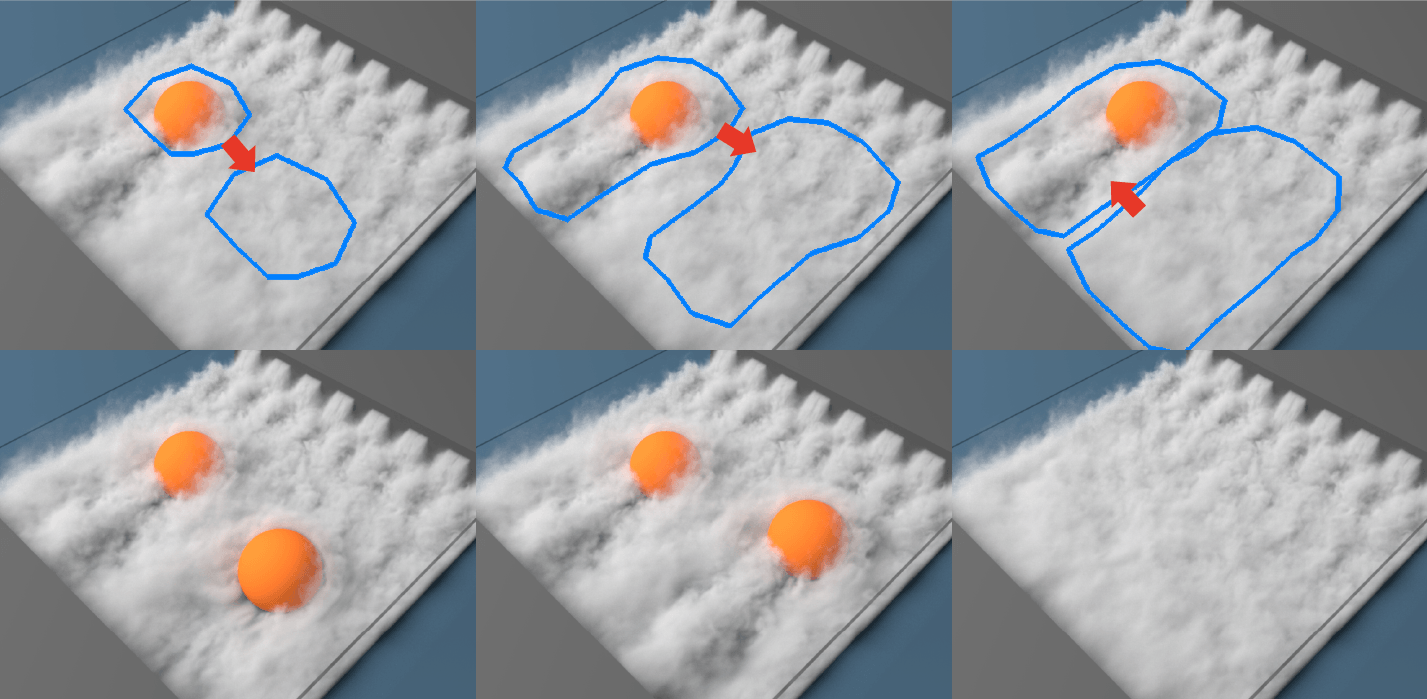

Physically-based fluid simulations usually incur a high computational cost for creating realistic animations. We present a technique that allows the user to create various fluid animations from an input fluid animation sequence, without the need for repeatedly performing simulations. Our system allows the user to deform the flow field in order to edit the overall fluid behavior. In order to maintain plausible physical behavior, we ensure the incompressibility to guarantee the mass conservation. We use a vector potential for representing the flow fields to realize such incompressibility-preserving deformations. Our method first computes (time-varying) vector potentials from the input velocity field sequence. Then, the user deforms the vector potential, and the system computes the deformed velocity field by applying the curl operator to the vector potential. The incompressibility is thus obtained by construction. We show various examples to demonstrate the usefulness of our method.
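The statement that incompressibility follows by construction can be checked in a small 2D sketch, where the vector potential reduces to a scalar stream function psi and the velocity is (d psi/dy, -d psi/dx). This is an illustration under stated assumptions, not the authors' 3D method: the analytic stream function, the shear deformation, and all function names are hypothetical.

```python
import math

def stream_function(x, y):
    # Hypothetical input flow: a single vortex in the unit square.
    return math.sin(math.pi * x) * math.sin(math.pi * y)

def deformed_stream_function(x, y):
    # Hypothetical user deformation: shear the sampling coordinates.
    return stream_function(x + 0.1 * math.sin(2.0 * math.pi * y), y)

def sample_grid(f, n):
    h = 1.0 / (n - 1)
    return [[f(i * h, j * h) for i in range(n)] for j in range(n)], h

def curl_2d(psi, h):
    """Central-difference velocity (u, v) on interior nodes of the grid."""
    ny, nx = len(psi), len(psi[0])
    u = [[0.0] * nx for _ in range(ny)]
    v = [[0.0] * nx for _ in range(ny)]
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            u[j][i] = (psi[j + 1][i] - psi[j - 1][i]) / (2.0 * h)
            v[j][i] = -(psi[j][i + 1] - psi[j][i - 1]) / (2.0 * h)
    return u, v

def max_divergence(u, v, h):
    """Largest |du/dx + dv/dy| where all four neighbours are interior nodes."""
    ny, nx = len(u), len(u[0])
    worst = 0.0
    for j in range(2, ny - 2):
        for i in range(2, nx - 2):
            div = ((u[j][i + 1] - u[j][i - 1]) + (v[j + 1][i] - v[j - 1][i])) / (2.0 * h)
            worst = max(worst, abs(div))
    return worst

def demo(n=32):
    psi, h = sample_grid(deformed_stream_function, n)
    u, v = curl_2d(psi, h)
    return max_divergence(u, v, h)
```

Because the two central-difference operators commute, the discrete divergence vanishes up to floating-point rounding for any deformation applied to psi, which mirrors the construction described in the abstract.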

Importance sampling of virtual point lights (VPLs) is an efficient method for computing global illumination. The key to importance sampling is to construct the probability function, which is used to sample the VPLs, such that it is proportional to the distribution of contributions from all the VPLs. Importance caching records the contributions of all the VPLs at sparsely distributed cache points on the surfaces and the probability function is calculated by interpolating the cached data. Importance caching, however, distributes cache points randomly, which makes it difficult to obtain probability functions proportional to the contributions of VPLs where the variation in the VPL contribution at nearby cache points is large. This paper proposes an adaptive cache insertion method for VPL sampling. Our method exploits the spatial and directional correlations of shading points and surface normals to enhance the proportionality. The method detects cache points that have large variations in their contribution from VPLs and inserts additional cache points with a small overhead. In equal-time comparisons including cache point generation and rendering, we demonstrate that the images rendered with our method are less noisy compared to importance caching.
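The role of proportionality can be seen in a small sketch of discrete importance sampling. This is not the paper's caching scheme: the function name `estimate_sum` and the toy contribution values are assumptions, and the weights stand in for whatever probability function the cache provides.

```python
import bisect
import itertools
import random

def estimate_sum(contributions, weights, n_samples, rng):
    """Monte Carlo estimate of sum(contributions).

    Light i is drawn with probability weights[i] / sum(weights), and each
    sample contributes contributions[i] / p_i. All weights must be positive.
    """
    total_w = sum(weights)
    cdf = list(itertools.accumulate(weights))
    acc = 0.0
    for _ in range(n_samples):
        i = bisect.bisect_right(cdf, rng.random() * total_w)
        i = min(i, len(weights) - 1)  # guard against rounding at the top end
        p = weights[i] / total_w
        acc += contributions[i] / p
    return acc / n_samples

rng = random.Random(0)
contrib = [1.0, 2.0, 5.0]                      # hypothetical VPL contributions
exact = estimate_sum(contrib, contrib, 16, rng)          # proportional weights
rough = estimate_sum(contrib, [1.0, 1.0, 1.0], 16, rng)  # uniform weights
```

When the weights are exactly proportional to the contributions, every sample evaluates to the true sum and the estimator has zero variance; the further the interpolated probabilities drift from proportionality, the noisier the result.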

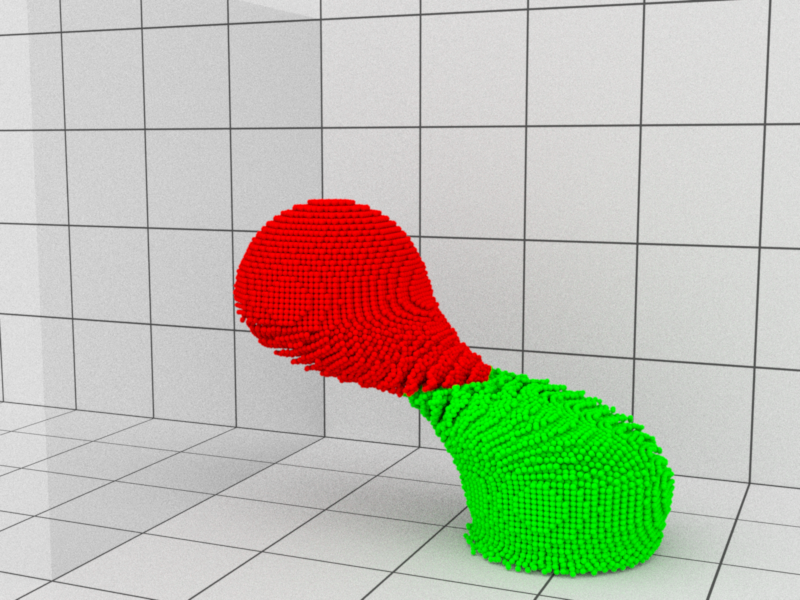

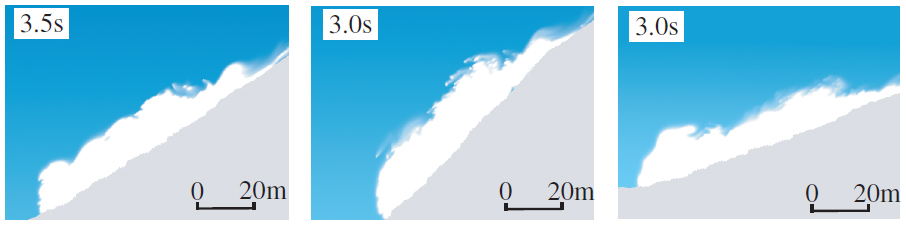

In this paper, we propose a method for the simulation of clouds using particles exclusively. The method is based on Position Based Fluids, which simulates fluids using position constraints. To reduce the simulation time, we use adaptive splitting and merging to concentrate computation on regions where it is most needed. When clouds are formed, particles are split so as to add more detail to the generated cloud surface and when they disappear, particles are merged back. We implement our adaptive method on the GPU to accelerate the computation. While the splitting portion is easily parallelizable, the merge portion is not. We develop a simple algorithm to address this problem and achieve reasonable simulation times.

We propose a stable and efficient particle-based method for simulating highly viscous fluids that can generate coiling and buckling phenomena and handle variable viscosity. In contrast to previous methods that use explicit integration, our method uses an implicit formulation to improve the robustness of viscosity integration, therefore enabling use of larger time steps and higher viscosities. We use Smoothed Particle Hydrodynamics to solve the full form of viscosity, constructing a sparse linear system with a symmetric positive definite matrix, while exploiting the variational principle that automatically enforces the boundary condition on free surfaces. We also propose a new method for extracting coefficients of the matrix contributed by second-ring neighbor particles to efficiently solve the linear system using a conjugate gradient solver. Several examples demonstrate the robustness and efficiency of our implicit formulation over previous methods and illustrate the versatility of our method.
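For readers unfamiliar with the solver named above, the following is a minimal sketch of the textbook conjugate gradient iteration for a symmetric positive definite system. It uses a small dense matrix for clarity; the 2x2 system is a hypothetical stand-in, and the paper's sparse viscosity matrix and coefficient extraction are not reproduced here.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(A, x):
    return [dot(row, x) for row in A]

def conjugate_gradient(A, b, tol=1e-10, max_iter=100):
    """Solve A x = b for symmetric positive definite A, starting from x = 0."""
    x = [0.0] * len(b)
    r = list(b)          # residual b - A x for the zero initial guess
    p = list(r)          # first search direction
    rs = dot(r, r)
    for _ in range(max_iter):
        if rs ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x

# Hypothetical SPD system; the exact solution is (1/11, 7/11).
solution = conjugate_gradient([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
```

The method only needs matrix-vector products, which is why it pairs well with the sparse matrices that arise from particle neighborhoods.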

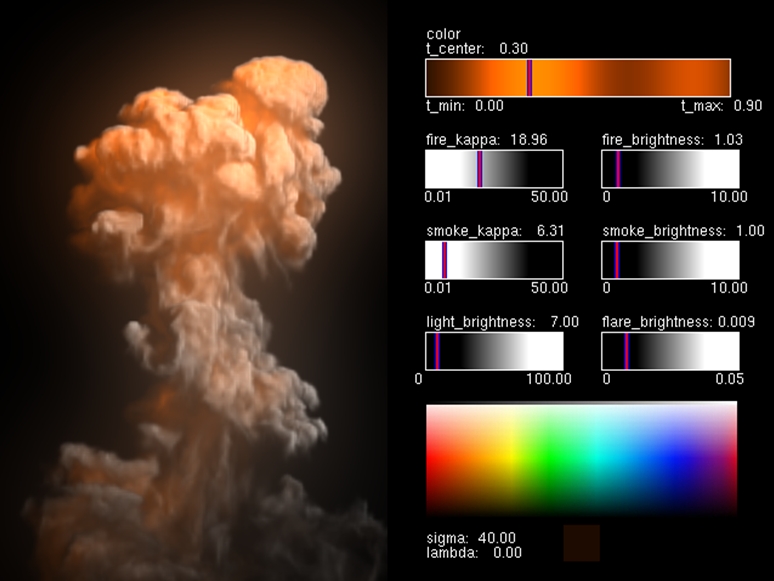

Synthetic volumetric fire and explosions are important visual effects used in many applications such as computer games and movies. For these applications, artists are often requested to achieve the desired visual appearance by adjusting some parameters for rendering them. However, this is extremely difficult and tedious, due to the complexity of these phenomena and the expensive computational cost for the rendering. This paper presents an interactive system that assists this adjustment process. Our method allows the user to interactively change the ratio of smoke and flame regions, the emissivity of the flame, and the optical thicknesses of the smoke and flame separately. The image is updated in real-time while the user modifies these parameters, taking into account the multiple scattering of light. We demonstrate the usefulness of our method by applying it to editing of the visual appearances of fire and explosions.

We propose a particle-based hybrid method for simulating volume preserving viscoelastic fluids with large deformations. Our method combines Smoothed Particle Hydrodynamics (SPH) and Position-based Dynamics (PBD) to approximate the dynamics of viscoelastic fluids. While preserving their volumes using SPH, we exploit an idea of PBD and correct particle velocities for viscoelastic effects not to negatively affect volume preservation of materials. To correct particle velocities and simulate viscoelastic fluids, we use connections between particles which are adaptively generated and deleted based on the positional relations of the particles. Additionally, we weaken the effect of velocity corrections to address plastic deformations of materials. For one-way and two-way fluid-solid coupling, we incorporate solid boundary particles into our algorithm. Several examples demonstrate that our hybrid method can sufficiently preserve fluid volumes and robustly and plausibly generate a variety of viscoelastic behaviors, such as splitting and merging, large deformations, and Barus effect.

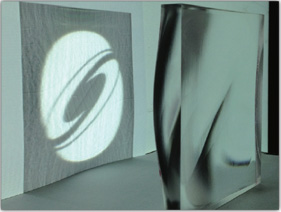

We present a technique for computing the shape of a transparent object that can generate user-defined caustic patterns. The surface of the object generated using our method is smooth. Thanks to this property, the resulting caustic pattern is smooth, natural, and highly detailed compared to the results obtained using previous methods. Our method consists of two processes. First, we use a differential geometry approach to compute a smooth mapping between the distributions of the incident light and the light reaching the screen. Second, we utilize this mapping to compute the surface of the object. We solve Poisson's equation to compute both the mapping and the surface of the object.

This paper proposes an interactive rendering method of cloth fabrics under environment lighting. The outgoing radiance from cloth fabrics in the microcylinder model is calculated by integrating the product of the distant environment lighting, the visibility function, the weighting function that includes shadowing/masking effects of threads, and the light scattering function of threads. The radiance calculation at each shading point of the cloth fabrics is simplified to a linear combination of triple product integrals of two circular Gaussians and the visibility function, multiplied by precomputed spherical Gaussian convolutions of the weighting function. We propose an efficient calculation method of the triple product of two circular Gaussians and the visibility function by using the gradient of signed distance function to the visibility boundary where the binary visibility changes in the angular domain of the hemisphere. Our GPU implementation enables interactive rendering of static cloth fabrics with dynamic viewpoints and lighting. In addition, interactive editing of parameters for the scattering function (e.g. thread's albedo) that controls the visual appearances of cloth fabrics can be achieved.

Divide-and-conquer ray tracing (DACRT) methods solve intersection problems between large numbers of rays and primitives by recursively subdividing the problem size until it can be easily solved. Previous DACRT methods subdivide the intersection problem based on the distribution of primitives only, and do not exploit the distribution of rays, which results in a decrease of the rendering performance especially for high resolution images with antialiasing. We propose an efficient DACRT method that exploits the distribution of rays by sampling the rays to construct an acceleration data structure. To accelerate ray traversals, we have derived a new cost metric which is used to avoid inefficient subdivision of the intersection problem where the number of rays is not sufficiently reduced. Our method accelerates the tracing of many types of rays (primary rays, less coherent secondary rays, random rays for path tracing) by a factor of up to 2 using ray sampling.

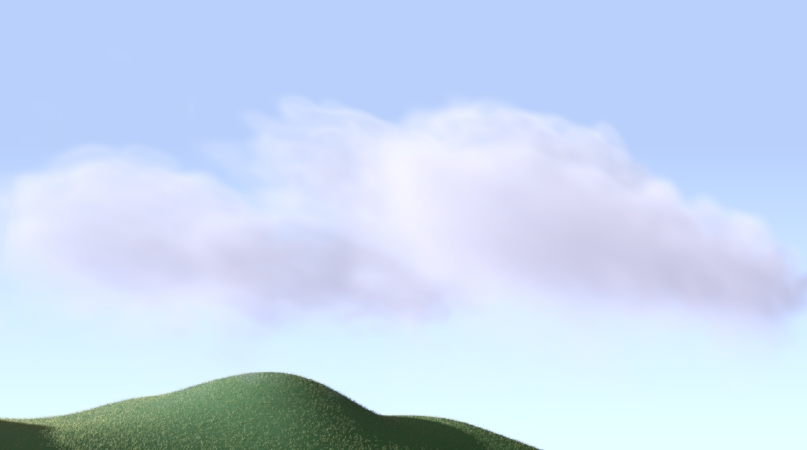

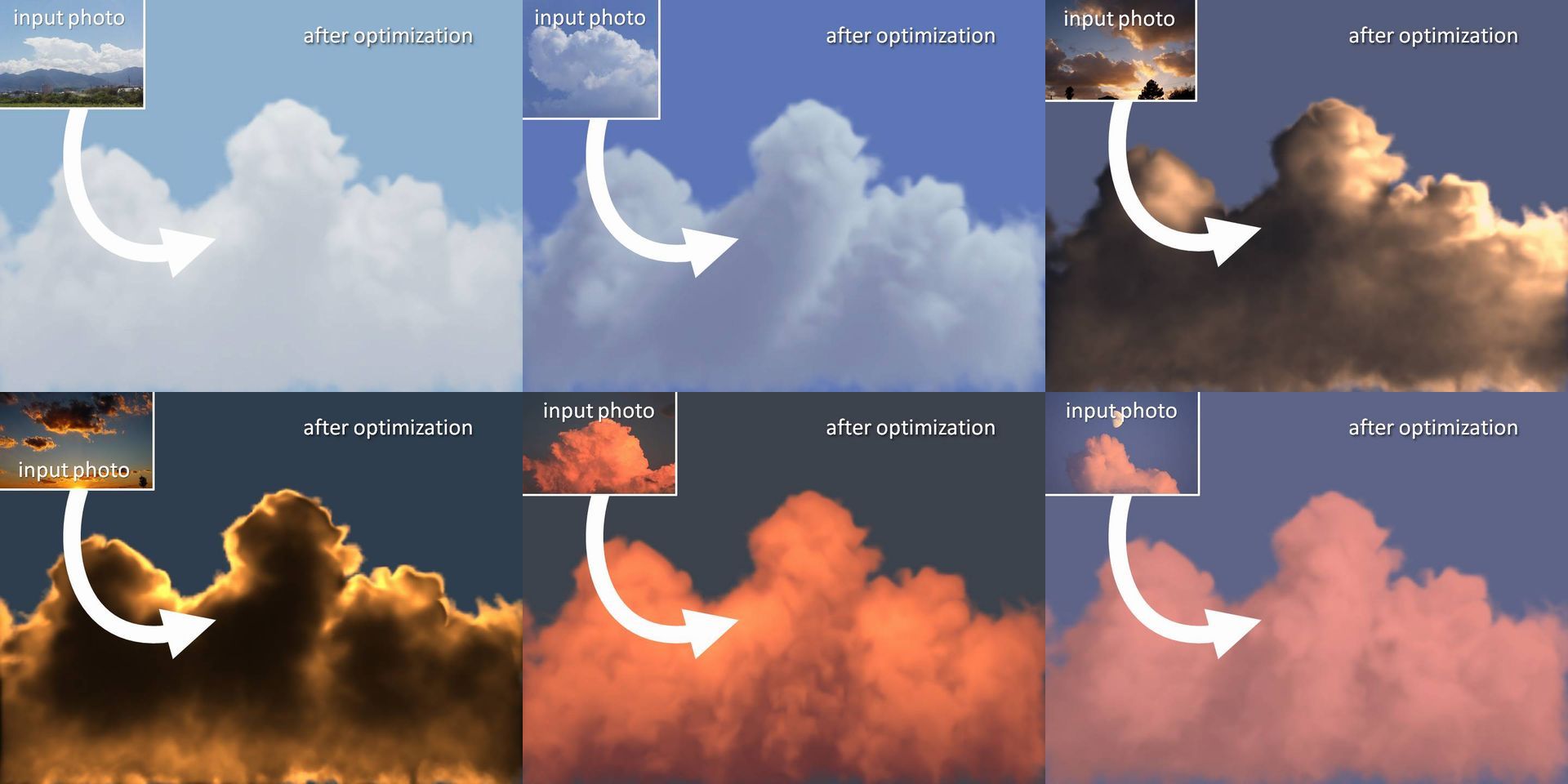

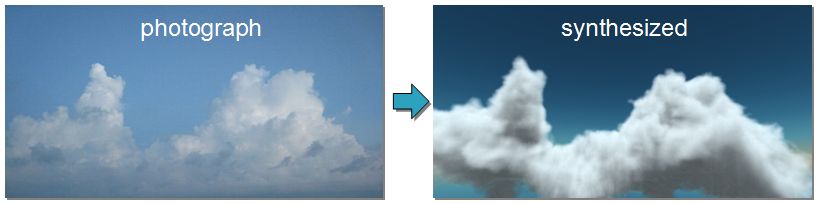

Clouds play an important role in creating realistic images of outdoor scenes. Many methods have therefore been proposed for displaying realistic clouds. However, the realism of the resulting images depends on many parameters used to render them and it is often difficult to adjust those parameters manually. This paper proposes a method for addressing this problem by solving an inverse rendering problem: given a non-uniform synthetic cloud density distribution, the parameters for rendering the synthetic clouds are estimated using photographs of real clouds. The objective function is defined as the difference between the color histograms of the photograph and the synthetic image. Our method searches for the optimal parameters using genetic algorithms. During the search process, we take into account the multiple scattering of light inside the clouds. The search process is accelerated by precomputing a set of intermediate images. After ten to twenty minutes of precomputation, our method estimates the optimal parameters within a minute.
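An objective of the kind described above can be sketched as a distance between normalized color histograms. This is a minimal illustration, not the paper's objective: the bin count, the per-channel layout, the L1 distance, and the function names are assumptions, and the genetic search itself is omitted.

```python
def color_histogram(pixels, bins=8):
    """Normalized per-channel histograms, concatenated as R, G, B.

    pixels: list of (r, g, b) tuples with components in [0, 1].
    """
    hist = [0.0] * (3 * bins)
    for px in pixels:
        for channel, value in enumerate(px):
            b = min(int(value * bins), bins - 1)  # clamp value 1.0 into last bin
            hist[channel * bins + b] += 1.0
    n = float(len(pixels))
    return [h / n for h in hist]

def histogram_distance(pixels_a, pixels_b, bins=8):
    """Sum of absolute bin differences (an assumed choice of metric)."""
    ha = color_histogram(pixels_a, bins)
    hb = color_histogram(pixels_b, bins)
    return sum(abs(a - b) for a, b in zip(ha, hb))

# Hypothetical two-pixel images standing in for a photograph and a rendering.
photo = [(0.9, 0.9, 1.0), (0.6, 0.7, 0.9)]
render = [(0.9, 0.9, 1.0), (0.2, 0.3, 0.5)]
cost = histogram_distance(photo, render)
```

A search procedure would render a candidate image for each parameter set and keep the parameters with the lowest cost.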

We present a new technique for bi-scale material editing using Spherical Gaussians (SGs). To represent large-scale appearances, an effective BRDF that is the average reflectance of small-scale details is used. The effective BRDF is calculated from the integral of the product of the Bidirectional Visible Normal Distribution (BVNDF) and BRDFs of small-scale geometry. Our method represents the BVNDF with a sum of SGs, which can be calculated on-the-fly, enabling interactive editing of small-scale geometry. By representing small-scale BRDFs with a sum of SGs, effective BRDFs can be calculated analytically by convolving the SGs for BVNDF and BRDF. We propose a new SG representation based on convolution of two SGs, which allows real-time rendering of effective BRDFs under all-frequency environment lighting and real-time editing of small-scale BRDFs. In contrast to the previous method, our method does not require extensive precomputation time and large volume of precomputed data per single BRDF, which makes it possible to implement our method on a GPU, resulting in real-time rendering.

We propose an efficient rendering method for dynamic scenes under all-frequency environmental lighting. To render the surfaces of objects illuminated by distant environmental lighting, the triple product of the lighting, the visibility function and the BRDF is integrated at each shading point on the surfaces. Our method represents the environmental lighting and the BRDF with a linear combination of spherical Gaussians, replacing the integral of the triple product with the sum of the integrals of spherical Gaussians over the visible region of the hemisphere. We propose a new form of spherical Gaussian, the integral spherical Gaussian, that enables the fast and accurate integration of spherical Gaussians with various sharpness over the visible region on the hemisphere. The integral spherical Gaussian simplifies the integration to a sum of four pre-integrated values, which are easily evaluated on-the-fly. With a combination of a set of spheres to approximate object geometries and the integral spherical Gaussian, our method can render object surfaces very efficiently. Our GPU implementation demonstrates real-time rendering of dynamic scenes with dynamic viewpoints, lighting, and BRDFs.
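To make the spherical Gaussian (SG) machinery used in the two abstracts above more concrete, here is a minimal Python sketch. It assumes the commonly used SG form G(v) = a · exp(s · (v · p − 1)) with unit axis p, sharpness s, and amplitude a. It shows only the closed-form integral over the whole sphere and the fact that the product of two SGs is again an SG; the integral spherical Gaussian over the visible region and the BVNDF representation from the papers are not reproduced. All function names are hypothetical.

```python
import math


def sg_eval(v, axis, sharpness, amplitude=1.0):
    """Evaluate a spherical Gaussian at unit direction v."""
    d = sum(a * b for a, b in zip(v, axis))
    return amplitude * math.exp(sharpness * (d - 1.0))


def sg_sphere_integral(sharpness, amplitude=1.0):
    """Closed-form integral of an SG over the full unit sphere."""
    return 2.0 * math.pi * amplitude / sharpness * (1.0 - math.exp(-2.0 * sharpness))


def sg_product(axis1, s1, a1, axis2, s2, a2):
    """Product of two SGs, returned as (axis, sharpness, amplitude) of one SG.

    Undefined when the weighted axes cancel exactly (s1*axis1 == -s2*axis2).
    """
    m = [s1 * x + s2 * y for x, y in zip(axis1, axis2)]
    sm = math.sqrt(sum(c * c for c in m))
    axis = [c / sm for c in m]
    amplitude = a1 * a2 * math.exp(sm - s1 - s2)
    return axis, sm, amplitude
```

Because products and whole-sphere integrals both have closed forms, sums of SGs are convenient for integrating lighting against a BRDF, which is the property these methods build on.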

Synthetic images of outdoor scenes generated by computer graphics usually contain the sky as background. The appearance of the sky plays an important role in enhancing the reality of scenes. In this paper, we propose an intuitive and interactive system for synthesizing sky images that can reflect the user's intention. Our system consists of two steps. The first step is a sky generation step. The luminance distribution and colors of the sky are generated using our intuitive user interface. The second step is a cloud composition step. We have prepared a database of photographs of real clouds. Our system searches the database for cloud photographs that are suitable for the synthesized sky. The user selects the clouds in the search results and they are composited into the sky image. By using our system, the user can easily generate desired sky images.

Photo-realistic rendering of inhomogeneous participating media with light scattering in consideration is important in computer graphics, and is typically computed using Monte Carlo based methods. The key technique in such methods is the free path sampling, which is used for determining the distance (free path) between successive scattering events. Recently, it has been shown that efficient and unbiased free path sampling methods can be constructed based on Woodcock tracking. The key concept for improving the efficiency is to utilize space partitioning (e.g., kd-tree or uniform grid), and a better space partitioning scheme is important for better sampling efficiency. Thus, an estimation framework for investigating the gain in sampling efficiency is important for determining how to partition the space. However, currently, there is no estimation framework that works in 3D space. In this paper, we propose a new estimation framework to overcome this problem. Using our framework, we can analytically estimate the sampling efficiency for any typical partitioned space. Conversely, we can also use this estimation framework for determining the optimal space partitioning. As an application, we show that new space partitioning schemes can be constructed using our estimation framework. Moreover, we show that the differences in the performances using different schemes can be predicted fairly well using our estimation framework.
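The role of the majorant in Woodcock tracking, and hence the motivation for space partitioning, can be sketched in a few lines of Python. This is a one-dimensional illustration under stated assumptions: `sigma_t` is a hypothetical callable returning the extinction coefficient at distance `t` along the ray, and `sigma_max` is a bound satisfying `sigma_max >= sigma_t(t)` everywhere. It is not the estimation framework proposed in the paper.

```python
import math
import random


def woodcock_free_path(sigma_t, sigma_max, rng, t_max=float("inf")):
    """Sample a free path by Woodcock (delta) tracking along a 1D ray.

    Returns (distance, steps); distance is None if the ray leaves the
    medium at t_max without a real collision.
    """
    t = 0.0
    steps = 0
    while True:
        steps += 1
        # Tentative step drawn from the homogeneous majorant medium.
        t -= math.log(1.0 - rng.random()) / sigma_max
        if t >= t_max:
            return None, steps
        # Accept as a real collision with probability sigma_t(t) / sigma_max.
        if rng.random() * sigma_max < sigma_t(t):
            return t, steps
```

A looser `sigma_max` leaves the sampled distribution unchanged but increases the number of rejected tentative steps. Partitioning space so that each region has a tight local majorant reduces that overhead, which is the efficiency gain the framework aims to predict.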

In this paper, we propose a real-time animation method for dynamic clouds illuminated by sunlight and skylight with multiple scattering. In order to create animations of outdoor scenes, it is necessary to render time-varying dynamic clouds. However, the simulation and the radiance calculation of dynamic clouds are computationally expensive. In order to address this problem, we propose an efficient method to create endless animations of dynamic clouds. The proposed method prepares a database of dynamic clouds consisting of a finite number of volume data. Using this database, volume data for the endless animation is generated at run time using the concept of Video Textures, and this data is rendered in real time using the GPU.

Realistic rendering of participating media is one of the major subjects in computer graphics. Monte Carlo techniques are widely used for realistic rendering because they provide unbiased solutions, which converge to exact solutions. Methods based on Monte Carlo techniques generate a number of light paths, each of which consists of a set of randomly selected scattering events. Finding a new scattering event requires free path sampling to determine the distance from the previous scattering event, and is usually a time-consuming process for inhomogeneous participating media. To address this problem, we propose an adaptive and unbiased sampling technique using kd-tree based space partitioning. A key contribution of our method is an automatic scheme that partitions the spatial domain into sub-spaces (partitions) based on a cost model that evaluates the expected sampling cost. The performance gain obtained by our method becomes larger for more inhomogeneous media, and reaches two orders of magnitude compared to traditional free path sampling techniques.

We propose a simple method for modeling clouds from a single photograph. Our method can synthesize three types of clouds: cirrus, altocumulus, and cumulus. We use three different representations for each type of cloud: two-dimensional texture for cirrus, implicit functions (metaballs) for altocumulus, and volume data for cumulus. Our method initially computes the intensity and the opacity of clouds for each pixel from an input photograph, stored as a cloud image. For cirrus, the cloud image is the output two-dimensional texture. For each of the other two types of cloud, three-dimensional density distributions are generated by referring to the cloud image. Since the method is very simple, the computational cost is low. Our method can generate, within several seconds, realistic clouds that are similar to those in the photograph.

The visual simulation of natural phenomena has been widely studied. Although several methods have been proposed to simulate melting, the flows of meltwater drops on the surfaces of objects are not taken into account. In this paper, we propose a particle-based method for the simulation of the melting and freezing of ice objects and the interactions between ice and fluids. To simulate the flow of meltwater on ice and the formation of water droplets, a simple interfacial tension is proposed, which can be easily incorporated into common particle-based simulation methods such as Smoothed Particle Hydrodynamics. The computations of heat transfer, the phase transition between ice and water, the interactions between ice and fluids, and the separation of ice due to melting are further accelerated by implementing our method using CUDA. We demonstrate our simulation and rendering method for depicting melting ice at interactive frame-rates.

In the field of computer graphics, simulation of fluids, including avalanches, is an important research topic. In this paper, we propose a method to simulate one kind of avalanche, the mixed-motion avalanche, which is usually large and travels down the slope at high speed, often resulting in impressive visual effects. The mixed-motion avalanche consists of snow smoke and liquefied snow, which form an upper suspension layer and a lower dense-flow layer, respectively. The mixed-motion avalanche travels down the surface of the snow-covered mountain, which is called the accumulated snow layer. We simulate a mixed-motion avalanche taking into account these three snow layers. We simulate the suspension layer using a grid-based approach, and the dense-flow and accumulated snow layers using a particle-based approach. An important contribution of our method is an interaction model between these snow layers that enables us to obtain the characteristic motions of avalanches, such as the generation of the snow smoke from the head of the avalanche.

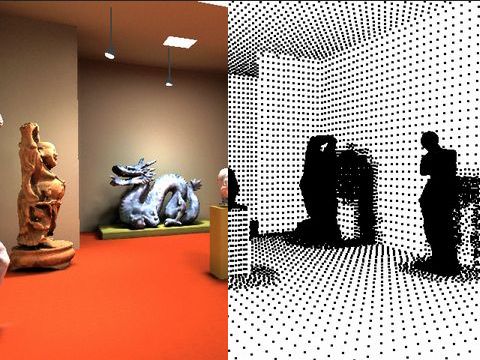

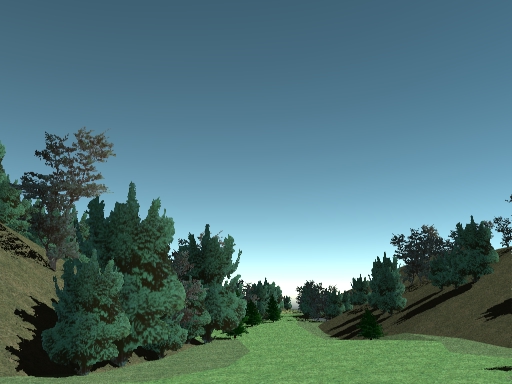

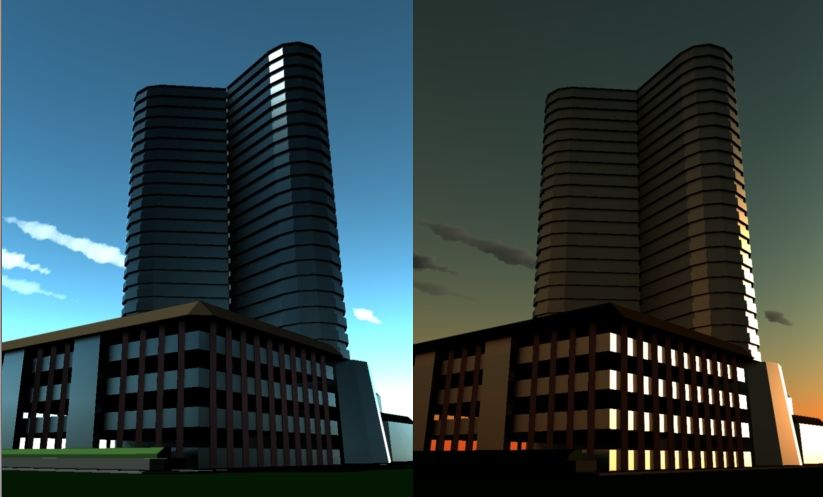

A rendering system for interior scenes is proposed in this paper. The light reaches the interior scene, usually through small regions, such as windows or abat-jours, which we call portals. To provide a solution, suitable for rendering interior scenes with portals, we extend the traditional precomputed radiance transfer approaches. In our approach, a bounding sphere, which we call a shell, of the interior, centered at each portal, is created and the light transferred from the shell towards the interior through the portal is precomputed. Each shell acts as an environment light source and its intensity distribution is determined by rendering images of the scene, viewed from the center of the shell. By updating the intensity distribution of the shell at each frame, we are able to handle dynamic objects outside the shells. The material of the portals can also be modified at run time (e.g. changing from transparent glass to frosted glass). Several applications are shown, including the illumination of a cathedral, lit by skylight at different times of a day, and a car, running in a town, at interactive frame rates, with a dynamic viewpoint.

Clouds play an important role in creating realistic images of outdoor scenes. In order to generate realistic clouds, many methods have been developed for modeling and animating clouds. One of the most effective approaches for synthesizing realistic clouds is to simulate cloud formation processes based on atmospheric fluid dynamics. Although this approach can create realistic clouds, the resulting shapes and motion depend on many simulation parameters and the initial state. Therefore, it is very difficult to adjust those parameters so that the clouds form the desired shapes. This paper addresses this problem and presents a method for controlling the simulation of cloud formation. In this paper, we focus on controlling cumuliform cloud formation. The user specifies the overall shape of the clouds. Then, our method automatically adjusts parameters during the simulation in order to generate clouds forming the specified shape. Our method can generate realistic clouds whose shapes closely match the desired shape.

Recently, many techniques using computational fluid dynamics have been proposed for the simulation of natural phenomena such as smoke and fire. Traditionally, a single grid is used for computing the motion of fluids. When an object interacts with a fluid, the resolution of the grid must be sufficiently high because the shape of the object is represented by a shape sampled at the grid points. This increases the number of grid points that are required, and hence the computational cost is increased. To address this problem, we propose a method using multiple grids that overlap with each other. In addition to a large single grid (a global grid) that covers the whole of the simulation space, separate grids (local grids) are generated that surround each object. The resolution of a local grid is higher than that of the global grid. The local grids move according to the motion of the objects. Therefore, the process of resampling the shape of the object is unnecessary when the object moves. To accelerate the computation, appropriate resolutions are adaptively determined for the local grids according to their distance from the viewpoint. Furthermore, since we use regular (orthogonal) lattices for the grids, the method is suitable for GPU implementation. This realizes the real-time simulation of interactions between objects and smoke.

Fast rendering of dynamic scenes taking into account global illumination is one of the most challenging tasks in computer graphics. This paper proposes a new precomputed radiance transfer (PRT) method for rendering dynamic scenes of rigid objects taking into account interreflections of light between surfaces with diffuse and glossy BRDFs. To compute the interreflections of light between rigid objects, we consider the objects as secondary light sources. We represent the intensity distributions on the surface of the objects with a linear combination of basis functions. We then calculate a component of the irradiance per basis function at each vertex of the object when illuminated by the secondary light source. We call this component of the irradiance, the basis irradiance. The irradiance is represented with a linear combination of the basis irradiances, which are computed efficiently at run-time by using a PRT technique. By using the basis irradiance, the calculation of multiple-bounce interreflected light is simplified and can be evaluated very quickly. We demonstrate the real-time rendering of dynamic scenes for low-frequency lighting and rendering for all-frequency lighting at interactive frame rates.

Methods for rendering natural scenes are used in many applications such as virtual reality, computer games, and flight simulators. In this paper, we focus on the rendering of outdoor scenes that include clouds and lightning. In such scenes, the intensity at a point in the clouds has to be calculated by taking into account the illumination due to lightning. The multiple scattering of light inside clouds is an important factor when creating realistic images. However, the computation of multiple scattering is very time-consuming. To address this problem, this paper proposes a fast method for rendering clouds that are illuminated by lightning. The proposed method consists of two processes. First, basis intensities are prepared in a preprocess step. The basis intensities are the intensities at points in the clouds that are illuminated by a set of point light sources. In this precomputation, both the direct light and also indirect light (i.e., multiple scattering) are taken into account. In the rendering process, the intensities of clouds are calculated in real-time by using the weighted sum of the basis intensities. A further increase in speed is achieved by using a wavelet transformation. Our method achieves the real-time rendering of realistic clouds illuminated by lightning.

In this paper, we propose a fast global illumination solution for interactive lighting design. Using our method, light sources and the viewpoint are movable, and the characteristics of materials can be modified (assuming low-frequency BRDF) during rendering. Our solution is based on particle tracing (a variation of photon mapping) and final gathering. We assume that objects in the input scene are static, and pre-compute potential light paths for particle tracing and final gathering. To perform final gathering fast, we propose an efficient technique called Hierarchical Histogram Estimation for rapid estimation of radiances from the distribution of the particles. The rendering process of our method can be fully implemented on the GPU and our method achieves interactive frame rates for rendering scenes with even more than 100,000 triangles.

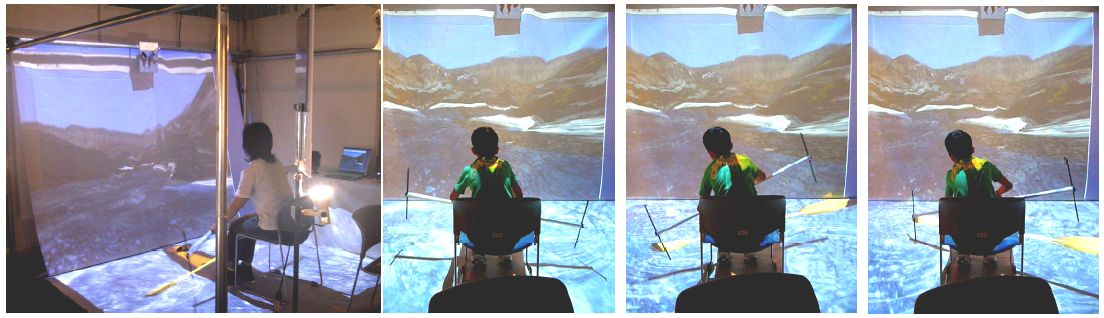

The key to enhancing perception of the virtual world is improving mechanisms for interacting with that world. Through providing a sense of touch, haptic rendering is one such mechanism. Many methods efficiently display force between rigid objects, but to achieve a truly realistic virtual environment, haptic interaction with fluids is also essential. In the field of computational fluid dynamics, researchers have developed methods to numerically estimate the resistance due to fluids by solving complex partial differential equations, called the Navier-Stokes equations. However, their estimation techniques, although numerically accurate, are prohibitively time-consuming. This becomes a serious problem for haptic rendering, which requires a high frame rate. To address this issue, we developed a method for rapidly estimating and displaying forces acting on a rigid virtual object due to water. In this article, we provide an overview of our method together with its implementation and two applications: a lure-fishing simulator and a virtual canoe simulator.

Recently, simulation of natural phenomena has become one of the most important research topics in computer graphics and many methods to render water, smoke, clouds and flames have been developed. In this paper, we focus on the visual simulation of flames among these natural phenomena. First, we propose an interactive method to simulate flames. Our method allows the user to control the shape and motion of the flames. This is achieved by combining cellular automata and particle systems. Secondly, we propose a fast rendering method using wavelets for surrounding objects illuminated by the flames. The proposed method can take into account not only direct light but also indirect light. This method achieves fast calculation of dynamic intensity and shadows of objects illuminated by flames. Using the proposed method, realistic images of a scene including fire and flame can be generated in real-time.

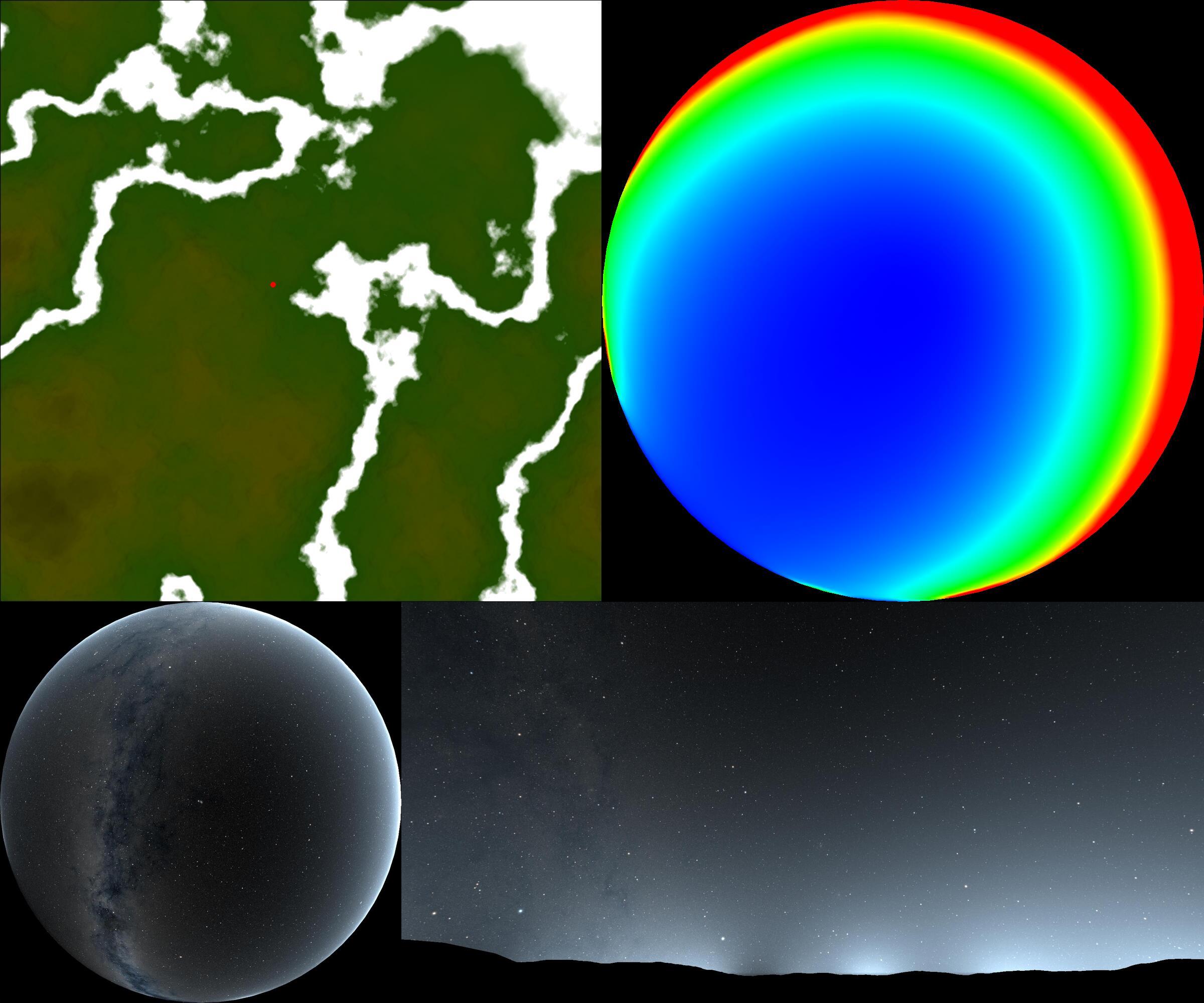

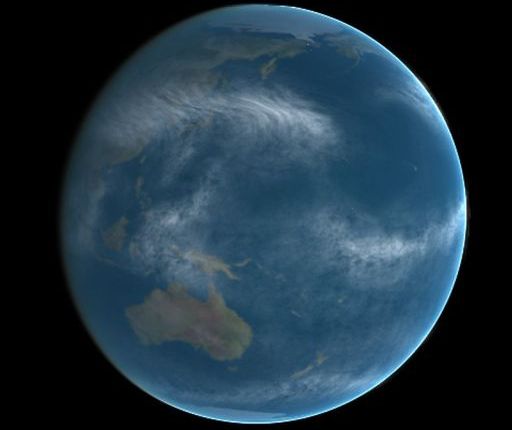

Computer generated images of the earth are often used for space flight simulators, computer games, movies, and so on. Clouds are indispensable to the creation of realistic images in these applications. This paper proposes a method for animating clouds surrounding the earth. The method allows the user to control the motion of clouds by specifying the center positions of high/low atmospheric pressure areas on the earth's surface. This data is used as input data and a three-dimensional velocity field is then calculated by solving Navier-Stokes equations. Water vapor is advected along the velocity field. Clouds are then generated due to the phase transition from water vapor to water droplets. We also propose an interactive system that enables the user to interactively control the simulation. The final photorealistic images are rendered by taking into account optical phenomena such as the scattering and absorption of light due to cloud particles.

Recently, techniques for realistic image synthesis using computer graphics have been widely used in many applications. The reality of the computer-generated images can be greatly improved by taking into account the reflections observed in mirrors, cars, glass walls of a building, etc. Therefore, there has been extensive research into rendering these reflections. However, the computational cost associated with reflections is generally very high because of the complexity. On the other hand, recent advancements in graphics hardware have encouraged researchers to develop hardware-accelerated methods for realistic image synthesis. This paper proposes such a fast method for rendering reflections using graphics hardware. The proposed method can render not only the ideal specular reflection such as from a mirror but also the glossy reflections such as those from a metal surface.

Recently, many researchers have paid attention to a technique called non-photorealistic rendering (NPR). In this paper, we propose a method for painterly rendering of water surfaces. The painterly images of water surfaces are efficiently generated by making use of techniques used in the field of three-dimensional computer graphics. Our method can create not only static images but also painterly animations of water such as a river. Moreover, we develop an interactive system using our method. This enables users to reflect their intentions in the resulting images.

In this paper, we propose a radiosity method for the point-sampled geometry to compute diffuse interreflection of light. Most traditional radiosity methods subdivide the surfaces of objects into small elements such as quadrilaterals. However, the point-sampled geometry includes no explicit information about surfaces, presenting a difficulty in applying the traditional approach to the point-sampled geometry. The proposed method addresses this problem by computing the interreflection without reconstructing any surfaces. The method realizes lighting simulations without losing the advantages of the point-sampled geometry.

Sound is an indispensable element for the simulation of a realistic virtual environment. Therefore, there has been much recent research focused on the simulation of realistic sound effects. This paper proposes a method for creating sound for turbulent phenomena such as fire. In a turbulent field, the complex motion of vortices leads to the generation of sound. This type of sound is called a vortex sound. The proposed method simulates a vortex sound by computing vorticity distributions using computational fluid dynamics. Sound textures for the vortex sound are first created in a pre-process step. The sound is then created at interactive rates by using these sound textures. The usefulness of the proposed method is demonstrated by applying it to the simulation of the sound of fire and other turbulent phenomena.

In computer graphics, most research focuses on creating images. However, there has been much recent work on the automatic generation of sound linked to objects in motion and the relative positions of receivers and sound sources. This paper proposes a new method for creating one type of sound called aerodynamic sound. Examples of aerodynamic sound include sound generated by swinging swords or by wind blowing. A major source of aerodynamic sound is vortices generated in fluids such as air. First, we propose a method for creating sound textures for aerodynamic sound by making use of computational fluid dynamics. Next, we propose a method using the sound textures for real-time rendering of aerodynamic sound according to the motion of objects or wind velocity.

In order to synthesize realistic images of scenes that include water surfaces, the rendering of optical effects caused by waves on the water surface, such as caustics and reflection, is necessary. However, rendering caustics is quite complex and time-consuming. In recent years, the performance of graphics hardware has made significant progress. This fact encourages researchers to study the acceleration of realistic image synthesis. We present here a method for the fast rendering of refractive and reflective caustics due to water surfaces. In the proposed method, an object is expressed by a set of texture mapped slices. We calculate the intensities of the caustics on the object by using the slices and store the intensities as textures. This makes it possible to render caustics at interactive rate by using graphics hardware. Moreover, we render objects that are reflected and refracted due to the water surface by using reflection/refraction mapping of these slices.

To create realistic images using computer graphics, an important element to consider is atmospheric scattering, that is, the phenomenon by which light is scattered by small particles in the air. This effect is the cause of the light beams produced by spotlights, shafts of light, foggy scenes, the bluish appearance of the earth's atmosphere, and so on. This paper proposes a fast method for rendering the atmospheric scattering effects based on actual physical phenomena. In the proposed method, look-up tables are prepared to store the intensities of the scattered light, and these are then used as textures. Realistic images are then created at interactive rates by making use of graphics hardware.

Simulation of natural phenomena is one of the important research fields in computer graphics. In particular, clouds play an important role in creating images of outdoor scenes. Fluid simulation is effective in creating realistic clouds because clouds are the visualization of atmospheric fluid. In this paper, we propose a simulation technique, based on a numerical solution of the partial differential equation of the atmospheric fluid model, for creating animated cumulus and cumulonimbus clouds with features formed by turbulent vortices.

We propose a non-photorealistic rendering method that creates an artistic effect called mosaicing. The proposed method converts images provided by the user into the mosaic images. Commercial image editing applications also provide a similar function. However, these applications often trade results for low-cost computing. It is desirable to create high quality images even if the computational cost is increased. We present an automatic method for mosaicing images by using Voronoi diagrams. The Voronoi diagrams are optimized so that the error between the original image and the resulting image is as small as possible. Next, the mosaic image is generated by using the sites and edges of the Voronoi diagram. We use graphics hardware to efficiently generate Voronoi diagrams. Furthermore, we extend the method to mosaic animations from sequences of images.
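The core of a Voronoi-based mosaic can be sketched as follows: each pixel is assigned to its nearest site, each cell is filled with the mean value of its pixels, and the squared error against the original image gives the quantity an optimizer would try to reduce. This Python sketch works on a small grayscale image stored as a list of rows. The brute-force nearest-site search, the squared-error measure, and the function names are assumptions for illustration; the hardware-accelerated diagram generation and the site optimization from the paper are not shown.

```python
def voronoi_mosaic(image, sites):
    """Fill each Voronoi cell of `sites` (x, y) with the mean of its pixels."""
    h, w = len(image), len(image[0])
    # Label every pixel with the index of its nearest site (brute force).
    labels = [
        [min(range(len(sites)),
             key=lambda k: (x - sites[k][0]) ** 2 + (y - sites[k][1]) ** 2)
         for x in range(w)]
        for y in range(h)
    ]
    sums = [0.0] * len(sites)
    counts = [0] * len(sites)
    for y in range(h):
        for x in range(w):
            sums[labels[y][x]] += image[y][x]
            counts[labels[y][x]] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return [[means[labels[y][x]] for x in range(w)] for y in range(h)]


def mosaic_error(image, mosaic):
    """Sum of squared differences between the original and the mosaic."""
    return sum((a - b) ** 2
               for row_a, row_b in zip(image, mosaic)
               for a, b in zip(row_a, row_b))
```

Moving the sites and re-evaluating `mosaic_error` is one simple way to see why site placement matters: sites aligned with image edges yield a much smaller error than arbitrary ones.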

Many researchers have been studying computer graphics simulations of natural phenomena. One important area in these studies is the animation of water droplets, whose applications include drive simulators. Because of the complexity of shape and motion, the animation of water droplets requires long calculation time. This paper proposes a method for real-time animations of water droplets running down on a glass plate, using graphics hardware. The proposed method takes into account depth of field effects, and makes it possible to change the focal point interactively depending on a point in the center of the scene being observed.

A number of methods have been developed for creating realistic images of natural scenes. Their applications include flight simulators, the visual assessment of outdoor scenery, etc. Previously, many of these methods have focused on creating images under clear or slightly cloudy days. Simulations under bad weather conditions, however, are one of the important issues for realism. Lightning is one of the essential elements for these types of simulations. This paper proposes an efficient method for creating realistic images of scenes including lightning. Our method can create photo-realistic images by taking into account the scattering effects due to clouds and atmospheric particles illuminated by lightning. Moreover, graphics hardware is utilized to accelerate the image generation. The usefulness of our method is demonstrated by creating images of outdoor scenes that include lightning.

The display of realistic natural scenes is one of the most important research areas in computer graphics. The rendering of water is one of the essential components. This paper proposes an efficient method for rendering images of scenes within water. For underwater scenery, the shafts of light and caustics are attractive and important elements. However, computing these effects is difficult and time-consuming since light refracts when passing through waves. To address the problem, our method makes use of graphics hardware to accelerate the computation. Our method displays the shafts of light by accumulating the intensities of streaks of light by using hardware color blending functions. The rendering of caustics is accelerated by making use of a Z-buffer and a stencil buffer. Moreover, by using a shadow mapping technique, our method can display shafts of light and caustics taking account of shadows due to objects.

The simulation of natural phenomena such as clouds, smoke, fire and water is one of the most important research areas in computer graphics. In particular, clouds play an important role in creating images of outdoor scenes. The proposed method is based on the physical simulation of atmospheric fluid dynamics which characterizes the shape of clouds. To take account of the dynamics, we used a method called the coupled map lattice (CML). CML is an extended method of cellular automaton and is computationally inexpensive. The proposed method can create various types of clouds and can also realize the animation of these clouds. Moreover, we have developed an interactive system for modeling various types of clouds.

This paper proposes a simple and computationally inexpensive method for animation of clouds. The cloud evolution is simulated using a cellular automaton that simplifies the dynamics of cloud formation. The dynamics are expressed by several simple transition rules and their complex motion can be simulated with a small amount of computation. Realistic images are then created using one of the standard graphics APIs, OpenGL. This makes it possible to utilize graphics hardware, resulting in fast image generation. The proposed method can realize the realistic motion of clouds, shadows cast on the ground, and shafts of light through clouds.

Recently, computer graphics have been used to simulate natural phenomena, such as clouds, fire, and ocean waves. This paper focuses on the evolution of clouds and proposes a simulation method for dynamic clouds. The method makes use of the cellular automaton for calculating the density distribution of clouds which varies over time. By using the cellular automaton, the distribution can be obtained with only a small amount of computation since the dynamics of clouds are expressed by several simple transition rules. The proposed method is applied to animations of outdoor scenes to demonstrate its usefulness.
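The cellular-automaton abstracts above do not list their transition rules, so the following Python sketch is only an assumed, simplified example of what such rules can look like. Each cell holds three Boolean states, here named `hum` (humidity), `act` (activation), and `cld` (cloud), and the grid is two-dimensional with a four-cell neighborhood. The state names, the neighborhood, and the rules themselves are illustrative assumptions rather than the authors' exact formulation.

```python
def step(hum, act, cld):
    """Advance a 2D three-state cloud automaton by one time step."""
    h, w = len(hum), len(hum[0])

    def active_neighbor(y, x):
        # True if any 4-connected neighbor is currently activated.
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and act[ny][nx]:
                return True
        return False

    # Humidity is consumed where activation occurs.
    new_hum = [[hum[y][x] and not act[y][x] for x in range(w)] for y in range(h)]
    # Activated cells become (and stay) cloud.
    new_cld = [[cld[y][x] or act[y][x] for x in range(w)] for y in range(h)]
    # Activation spreads into humid, not yet activated neighbors.
    new_act = [[(not act[y][x]) and hum[y][x] and active_neighbor(y, x)
                for x in range(w)] for y in range(h)]
    return new_hum, new_act, new_cld
```

Since every rule is a handful of Boolean operations per cell, the cost per step is linear in the number of cells, which matches the abstracts' point that the density distribution can be updated with little computation.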

This paper proposes an image-based modeling of clouds where realistic clouds are created from satellite images using metaballs. The intention of the paper is for applications to space flight simulators, the visualization of the weather information, and the simulation of surveys of the earth. In the proposed method, the density distribution inside the clouds is defined by a set of metaballs. Parameters of metaballs, such as center positions, radii, and density values, are automatically determined so that a synthesized image of clouds modeled by using metaballs is similar to the original satellite image. We also propose an animation method for clouds generated by a sequence of satellite images taken at some interval. The usefulness of the proposed method is demonstrated by several examples of clouds generated from satellite images of typhoons passing through Japan.

Computer graphics has become a useful tool for interior lighting design. Using computer graphics, we can evaluate lighting effects visually in advance. However, the high computational cost of intensity calculation makes it difficult to interactively design the lighting effects, especially when taking into account interreflections of light. We propose an interactive system with forward and inverse lighting design approaches. For the fast forward solution, we employ a precomputation-based method. For the inverse solution, we employ genetic algorithms. Our system allows the user to design the lighting effects interactively and intuitively.

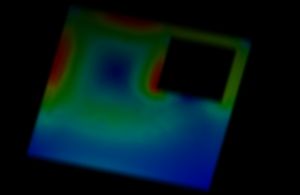

Animation of a time-varying 3-D scalar field distribution requires generation of a set of images at sampled time intervals, i.e., frames. Although volume rendering can be very advantageous for such 3-D scalar field visualizations, in the case of animation the computation time needed to generate the entire set of images can be considerably long. To address this problem, this paper proposes a fast volume rendering method which utilizes orthonormal wavelets. The coherency between frames, in the proposed method, is eliminated by expanding the scalar field into a series of wavelets. An application of the proposed method to a time-varying eddy-current density distribution inside an aluminum plate (TEAM Workshop Problem 7) is given.
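As a small illustration of expanding data into orthonormal wavelets, the Python sketch below implements a one-dimensional orthonormal Haar transform and its inverse. The choice of the Haar basis, the restriction to power-of-two lengths, and the function names are assumptions made for this example; the abstract does not state which wavelet family is used.

```python
import math


def haar_forward(signal):
    """Full orthonormal Haar decomposition; len(signal) must be a power of 2."""
    out = list(signal)
    n = len(out)
    root2 = math.sqrt(2.0)
    while n > 1:
        half = n // 2
        avg = [(out[2 * i] + out[2 * i + 1]) / root2 for i in range(half)]
        dif = [(out[2 * i] - out[2 * i + 1]) / root2 for i in range(half)]
        out[:n] = avg + dif  # coarse part first, detail part after it
        n = half
    return out


def haar_inverse(coeffs):
    """Invert haar_forward."""
    out = list(coeffs)
    root2 = math.sqrt(2.0)
    n = 1
    while n < len(out):
        merged = []
        for a, d in zip(out[:n], out[n:2 * n]):
            merged += [(a + d) / root2, (a - d) / root2]
        out[:2 * n] = merged
        n *= 2
    return out
```

For smooth or slowly varying data most detail coefficients are close to zero, so they can be dropped or skipped, which is the kind of redundancy a wavelet-based renderer can exploit.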

Recently, computer graphics has often been used for both architectural design and visual environmental assessment. Using computer graphics, designers can easily compare the effect of natural light on their architectural designs under various conditions by changing the time of day, the season, or the atmospheric conditions (clear or overcast sky). In traditional methods of calculating the illuminance due to sky light, however, the entire calculation must be performed from scratch whenever such conditions change. Therefore, comparing architectural designs under different conditions requires a great deal of time to generate the images. This paper proposes a method of quickly generating images of an outdoor scene, even when the luminous intensity distribution of the sky changes, by expressing the illuminance due to sky light with a series of basis functions.

When designing interior lighting effects, it is desirable to compare a variety of lighting designs involving different lighting devices and directions of light. It is, however, time-consuming to generate images with many different lighting parameters while taking interreflection into account, because all luminances must be recalculated for each change. This makes it difficult to design lighting effects interactively. To address this problem, this paper proposes a method of quickly generating images of a given scene illustrating an interreflective environment illuminated by sources with arbitrary luminous intensity distributions. In the proposed method, the luminous intensity distribution is expressed with basis functions. The proposed method uses a series of spherical harmonic functions as basis functions, and calculates in advance each intensity on surfaces lit by light sources whose luminous intensity distributions are the same as the spherical harmonic functions. The proposed method makes it possible to generate images so quickly that we can change the luminous intensity distribution interactively. By combining the proposed method with an interactive walk-through that employs intensity mapping, an interactive system for lighting design is implemented. The usefulness of the proposed method is demonstrated by its application to interactive lighting design, where many images are generated by altering lighting devices and/or the direction of light.
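
The precomputation idea above rests on the linearity of light transport: if a distribution is a weighted sum of basis functions, the resulting image is the same weighted sum of the images rendered for each basis function. The sketch below checks this with a toy model. The random matrix `T` standing in for light transport (including interreflection) and the random basis `Y` standing in for sampled spherical harmonics are assumptions made purely for illustration, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_dirs, n_basis, n_pixels = 64, 9, 100

# Stand-in for basis functions sampled at n_dirs emission directions.
# Assumption: real spherical harmonics would be used in practice.
Y = rng.standard_normal((n_basis, n_dirs))

# Toy linear light transport from emission directions to pixels.
T = rng.random((n_pixels, n_dirs))

def render_direct(distribution):
    """Expensive path: full transport for one intensity distribution."""
    return T @ distribution

# Precomputation: one image per basis function, shape (n_basis, n_pixels).
basis_images = np.stack([render_direct(Y[k]) for k in range(n_basis)])

def render_fast(weights):
    """Interactive path: weighted sum of the precomputed basis images."""
    return np.asarray(weights, dtype=float) @ basis_images
```

For any weight vector `w`, `render_fast(w)` matches `render_direct(w @ Y)` up to rounding, while costing only one small matrix-vector product per change of the distribution.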

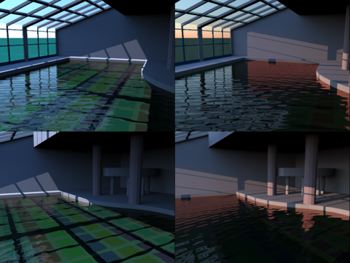

Indoor lighting design inevitably requires rendering rooms lit by natural light, especially for spaces with many windows such as an atelier or an indoor pool. This paper proposes a method for calculating the illuminance due to natural light, i.e., direct sunlight and skylight, passing through transparent planes such as window glass. The proposed method calculates such illuminance efficiently and accurately, because it takes into account both the non-uniform luminous intensity distribution of skylight and the variation in the transparency of glass with the incident angle of light. Several examples, including the lighting design of an indoor pool, are shown to demonstrate the usefulness of the proposed method.